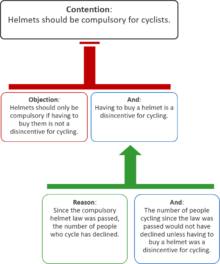

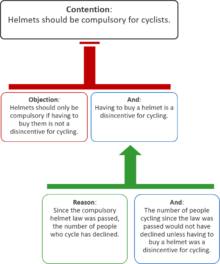

A schematic argument map showing a contention (or conclusion), supporting arguments and objections, and an inference objection.

In informal logic and philosophy, an argument map or argument diagram is a visual representation of the structure of an argument. An argument map typically includes the key components of the argument, traditionally called the conclusion and the premises, also called the contention and reasons.[1] Argument maps can also show co-premises, objections, counterarguments, rebuttals, and lemmas. There are different styles of argument map, but they are often functionally equivalent and represent an argument's individual claims and the relationships between them.

Argument maps are commonly used in the context of teaching and applying critical thinking.[2] The purpose of mapping is to uncover the logical structure of arguments, identify unstated assumptions, evaluate the support an argument offers for a conclusion, and aid understanding of debates. Argument maps are often designed to support deliberation of issues, ideas and arguments in wicked problems.[3]

An argument map is not to be confused with a concept map or a mind map, two other kinds of node–link diagram which have different constraints on nodes and links.[4]

Key features of an argument map

A number of different kinds of argument map have been proposed, but the most common, which Chris Reed and Glenn Rowe called the standard diagram,[5] consists of a tree structure with each of the reasons leading to the conclusion. There is no consensus as to whether the conclusion should be at the top of the tree with the reasons leading up to it or at the bottom with the reasons leading down to it.[5] Another variation diagrams an argument from left to right.[6]
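The three layout conventions differ only in drawing direction, not in structure. As a minimal sketch, assuming a map stored as hypothetical `(reason, conclusion)` pairs and rendered with Graphviz's DOT language, the `rankdir` graph attribute selects the convention: `BT` puts the conclusion at the top with reasons leading up to it, `TB` puts it at the bottom with reasons leading down to it, and `LR` draws the argument from left to right. The node names `C`, `R1` and `R2` and the helper `to_dot` are illustrative only.

```python
# Hypothetical argument: each pair is (reason, conclusion).
ARROWS = [
    ("R1", "C"),
    ("R2", "C"),
]
LABELS = {
    "C": "Conclusion (contention)",
    "R1": "First reason",
    "R2": "Second reason",
}


def to_dot(arrows, labels, rankdir="BT"):
    """Emit Graphviz DOT text for a standard-diagram tree.

    rankdir="BT": conclusion at the top, reasons leading up to it.
    rankdir="TB": conclusion at the bottom, reasons leading down to it.
    rankdir="LR": reasons on the left, conclusion on the right.
    """
    lines = ["digraph argument {", f"  rankdir={rankdir};", "  node [shape=box];"]
    for node_id, text in labels.items():
        escaped = text.replace('"', '\\"')  # DOT strings escape double quotes
        lines.append(f'  {node_id} [label="{escaped}"];')
    for reason, conclusion in arrows:
        lines.append(f"  {reason} -> {conclusion};")
    lines.append("}")
    return "\n".join(lines)


print(to_dot(ARROWS, LABELS, rankdir="BT"))
```

Saving the output to a file and running `dot -Tsvg argument.dot -o argument.svg` would draw it; switching between the conventions only means changing `rankdir`.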

According to Doug Walton and colleagues, an argument map has two basic components: "One component is a set of circled numbers arrayed as points. Each number represents a proposition (premise or conclusion) in the argument being diagrammed. The other component is a set of lines or arrows joining the points. Each line (arrow) represents an inference. The whole network of points and lines represents a kind of overview of the reasoning in the given argument..."[7] With the introduction of software for producing argument maps, it has become common for argument maps to consist of boxes containing the actual propositions rather than numbers referencing those propositions.
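Walton's two components correspond directly to a directed graph: numbered propositions are the nodes and inference arrows are the edges. The sketch below, with placeholder proposition texts and hypothetical helper names, renders the same arrows in both styles: once as arrows between numbers (with the propositions kept in a separate legend) and once as boxes that carry the proposition text itself.

```python
# Each number stands for a proposition, as in Walton's "circled numbers".
PROPOSITIONS = {
    1: "Conclusion",
    2: "First premise",
    3: "Second premise",
}
# Each arrow (premise, conclusion) stands for one inference.
INFERENCES = [(2, 1), (3, 1)]


def as_numbers(inferences):
    """Older style: arrows between numbers; the propositions live in a legend."""
    return [f"({p}) -> ({c})" for p, c in inferences]


def as_boxes(inferences, propositions):
    """Software-era style: each box contains the proposition itself."""
    return [f"[{propositions[p]}] -> [{propositions[c]}]" for p, c in inferences]


for line in as_numbers(INFERENCES):
    print(line)
for line in as_boxes(INFERENCES, PROPOSITIONS):
    print(line)
```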

There is disagreement on the terminology to be used when describing argument maps,[8] but the standard diagram contains the following structures:

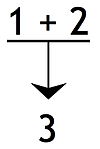

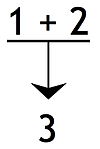

Dependent premises or co-premises, where at least one of the joined premises requires another premise before it can give support to the conclusion: an argument with this structure has been called a linked argument.[9]

Statements 1 and 2 are dependent premises or co-premises
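The difference between dependent and independent premises can be captured by grouping premises per inference: a linked inference carries a set of co-premises that support the conclusion only together. A minimal sketch with hypothetical names, assuming (the caption does not say which statement is the conclusion) that statements 1 and 2 jointly support a conclusion labelled `C`:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inference:
    """One arrow in the map; every premise in the set is needed together."""
    premises: frozenset
    conclusion: str


def is_linked(inference):
    """A linked argument has two or more co-premises in a single inference."""
    return len(inference.premises) > 1


def gives_support(inference, accepted):
    """The inference supports its conclusion only if every co-premise is accepted."""
    return inference.premises <= accepted


# Statements 1 and 2 as co-premises of a single (linked) inference.
linked = Inference(frozenset({"1", "2"}), "C")

print(is_linked(linked))                  # True
print(gives_support(linked, {"1"}))       # False: 1 alone gives no support
print(gives_support(linked, {"1", "2"}))  # True
```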

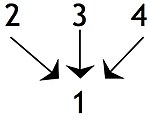

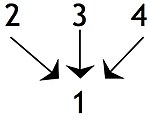

Independent premises, where each premise can support the conclusion on its own: although independent premises may jointly make the conclusion more convincing, this is to be distinguished from situations where a premise gives no support unless it is joined to another premise. Where several premises or groups of premises lead to a final conclusion, the argument might be described as convergent. This is distinguished from a divergent argument, where a single premise might be used to support two separate conclusions.[10]

Statements 2, 3, 4 are independent premises
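Independent premises, by contrast, each get an inference of their own. With that representation, convergent and divergent structures can be read off by counting: a conclusion reached by two or more separate inferences is convergent, and a premise used by inferences with two or more different conclusions is divergent. A sketch with hypothetical helper names, assuming that statements 2, 3 and 4 each independently support statement 1:

```python
from collections import defaultdict

# Each inference is (set of premises, conclusion); independent premises
# each get their own inference.
INFERENCES = [
    ({2}, 1),
    ({3}, 1),
    ({4}, 1),
]


def convergent_conclusions(inferences):
    """Conclusions supported by two or more separate inferences."""
    count = defaultdict(int)
    for _premises, conclusion in inferences:
        count[conclusion] += 1
    return {c for c, n in count.items() if n >= 2}


def divergent_premises(inferences):
    """Premises used to support two or more different conclusions."""
    targets = defaultdict(set)
    for premises, conclusion in inferences:
        for p in premises:
            targets[p].add(conclusion)
    return {p for p, cs in targets.items() if len(cs) >= 2}


print(convergent_conclusions(INFERENCES))        # {1}
print(divergent_premises([({5}, 6), ({5}, 7)]))  # {5}
```

Note that a single linked inference such as `({2, 3}, 1)` is not counted as convergent, which mirrors the distinction drawn above between joint and independent support.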

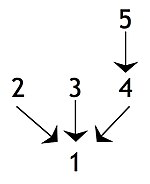

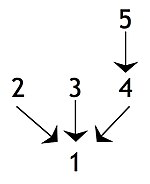

Intermediate conclusions or sub-conclusions, where a claim is supported by another claim and is used in turn to support some further claim, i.e. the final conclusion or another intermediate conclusion: in the following diagram, statement 4 is an intermediate conclusion in that it is a conclusion in relation to statement 5 but is a premise in relation to the final conclusion, i.e. statement 1. An argument with this structure is sometimes called a complex argument. If there is a single chain of claims containing at least one intermediate conclusion, the argument is sometimes described as a serial argument or a chain argument.[11]

Statement 4 is an intermediate conclusion or sub-conclusion
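An intermediate conclusion is simply a claim that appears both as the conclusion of one arrow and as the premise of another. The sketch below finds such claims and checks the narrower serial (chain) case, using the numbering from the text (5 supports 4, and 4 supports 1). It assumes, for simplicity, that the map contains no cycles; the helper names are hypothetical.

```python
from collections import Counter


def intermediate_conclusions(arrows):
    """Claims that are supported by something and also support something else."""
    premises = {p for p, _ in arrows}
    conclusions = {c for _, c in arrows}
    return premises & conclusions


def is_serial(arrows):
    """True if an acyclic map is one chain with at least one intermediate conclusion."""
    out_deg = Counter(p for p, _ in arrows)
    in_deg = Counter(c for _, c in arrows)
    nodes = set(out_deg) | set(in_deg)
    # Every claim has at most one incoming and at most one outgoing arrow.
    degrees_ok = all(out_deg[n] <= 1 and in_deg[n] <= 1 for n in nodes)
    sources = [n for n in nodes if in_deg[n] == 0]
    sinks = [n for n in nodes if out_deg[n] == 0]
    return (degrees_ok and len(sources) == 1 and len(sinks) == 1
            and bool(intermediate_conclusions(arrows)))


chain = [(5, 4), (4, 1)]
print(intermediate_conclusions(chain))  # {4}
print(is_serial(chain))                 # True
print(is_serial(chain + [(2, 1)]))      # False: 1 now has two incoming arrows
```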

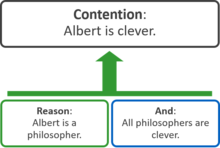

Each of these structures can be represented by the equivalent "box and line" approach to argument maps. In the following diagram, the contention is shown at the top, and the boxes linked to it represent supporting reasons, which comprise one or more premises. The green arrow indicates that the two reasons support the contention:

Argument maps can also represent counterarguments. In the following diagram, the two objections weaken the contention, while the reasons support the premise of the objection:

A sample argument using objections
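In the box-and-line style, each link carries a polarity: it either supports or opposes the box it points to. A sketch using hypothetical identifiers (`C` for the contention, `R1`/`R2` for reasons, `O1`/`O2` for objections, `R3` for a reason backing the first objection) and a simplified model in which links attach to whole boxes rather than to individual premises inside them:

```python
from typing import NamedTuple


class Link(NamedTuple):
    source: str
    target: str
    kind: str  # "supports" or "opposes"


LINKS = [
    Link("R1", "C", "supports"),
    Link("R2", "C", "supports"),
    Link("O1", "C", "opposes"),
    Link("O2", "C", "opposes"),
    Link("R3", "O1", "supports"),  # a reason backing an objection
]


def pros_and_cons(claim, links):
    """Return (supporting boxes, opposing boxes) linked directly to `claim`."""
    pros = [link.source for link in links
            if link.target == claim and link.kind == "supports"]
    cons = [link.source for link in links
            if link.target == claim and link.kind == "opposes"]
    return pros, cons


print(pros_and_cons("C", LINKS))   # (['R1', 'R2'], ['O1', 'O2'])
print(pros_and_cons("O1", LINKS))  # (['R3'], [])
```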

Representing an argument as an argument map

A written text can be transformed into an argument map by following a sequence of steps.

Monroe Beardsley's 1950 book Practical Logic recommended the following procedure:[12]

- Separate statements by brackets and number them.

- Put circles around the logical indicators.

- Supply, in parentheses, any logical indicators that are left out.

- Set out the statements in a diagram in which arrows show the relationships between statements.

A diagram of the example from Beardsley's Practical Logic

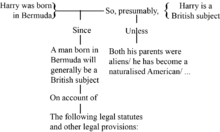

Beardsley gave the first example of a text being analysed in this way:

- Though ① [people who talk about the "social significance" of the arts don’t like to admit it], ② [music and painting are bound to suffer when they are turned into mere vehicles for propaganda]. For ③ [propaganda appeals to the crudest and most vulgar feelings]: (for) ④ [look at the academic monstrosities produced by the official Nazi painters]. What is more important, ⑤ [art must be an end in itself for the artist], because ⑥ [the artist can do the best work only in an atmosphere of complete freedom].

Beardsley said that the conclusion in this example is statement ②. Statement ④ needs to be rewritten as a declarative sentence, e.g. "Academic monstrosities [were] produced by the official Nazi painters." Statement ① points out that the conclusion isn't accepted by everyone, but statement ① is omitted from the diagram because it doesn't support the conclusion. Beardsley said that the logical relation between statement ③ and statement ④ is unclear, but he proposed to diagram statement ④ as supporting statement ③.
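The first step of Beardsley's procedure, bracketing and numbering the statements, becomes mechanical once a passage has been marked up. The sketch below pulls the circled numbers and bracketed statements out of the example with a regular expression, drops ① as Beardsley does, and records arrows between the remaining statements. Only ④→③ is Beardsley's stated proposal; the arrows ③→②, ⑤→② and ⑥→⑤ are an assumed reading of the indicators "For", "What is more important" and "because". The helper `number_statements` is hypothetical.

```python
import re

PASSAGE = (
    'Though ① [people who talk about the "social significance" of the arts '
    "don’t like to admit it], ② [music and painting are bound to suffer when "
    "they are turned into mere vehicles for propaganda]. For ③ [propaganda "
    "appeals to the crudest and most vulgar feelings]: (for) ④ [look at the "
    "academic monstrosities produced by the official Nazi painters]. What is "
    "more important, ⑤ [art must be an end in itself for the artist], because "
    "⑥ [the artist can do the best work only in an atmosphere of complete freedom]."
)

# A circled digit (U+2460 is ①, U+2473 is ⑳) followed by a bracketed statement.
MARKED = re.compile(r"([\u2460-\u2473])\s*\[([^\]]+)\]")


def number_statements(text):
    """Map each circled number to the statement in the brackets after it."""
    return {ord(mark) - 0x2460 + 1: stmt for mark, stmt in MARKED.findall(text)}


statements = number_statements(PASSAGE)
del statements[1]  # ① concedes opposition but does not support the conclusion

# (premise, conclusion): ④ -> ③ is Beardsley's proposal; the rest is an assumed reading.
ARROWS = [(4, 3), (3, 2), (5, 2), (6, 5)]

for number, text in sorted(statements.items()):
    print(number, text)
```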

A box and line diagram of Beardsley's example, produced using Harrell's procedure

More recently, philosophy professor Maralee Harrell recommended the following procedure:[13]

- Identify all the claims being made by the author.

- Rewrite them as independent statements, eliminating non-essential words.

- Identify which statements are premises, sub-conclusions, and the main conclusion.

- Provide missing, implied conclusions and implied premises. (This is optional depending on the purpose of the argument map.)

- Put the statements into boxes and draw a line between any boxes that are linked.

- Indicate support from premise(s) to (sub)conclusion with arrows.

Argument maps are useful not only for representing and analyzing existing writings, but also for thinking through issues as part of a problem-structuring process or writing process. The use of such argument analysis for thinking through issues has been called "reflective argumentation".[14]

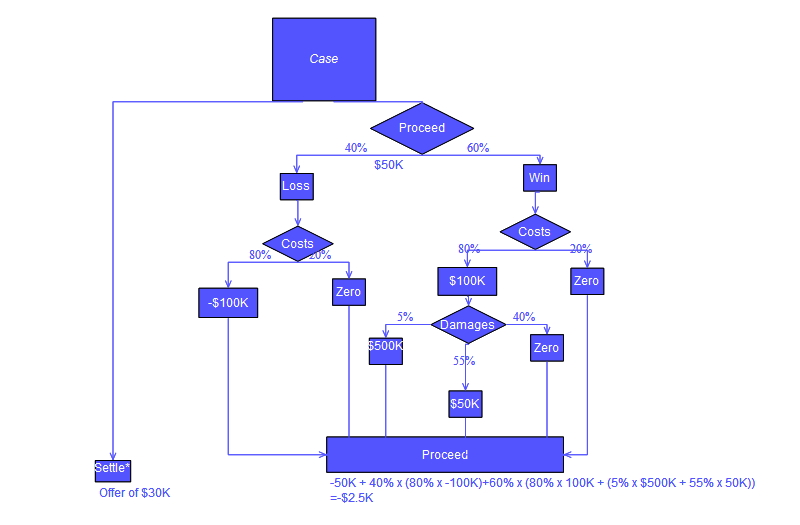

An argument map, unlike a decision tree, does not tell one how to make a decision, but the process of choosing a coherent position (or reflective equilibrium) based on the structure of an argument map can be represented as a decision tree.[15]

History

The philosophical origins and tradition of argument mapping

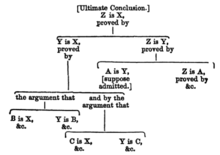

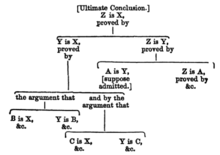

From Whately's Elements of Logic, p. 467, 1852 edition

In the Elements of Logic, which was published in 1826 and issued in many subsequent editions,[16] Archbishop Richard Whately gave what is probably the first form of an argument map, introducing it with the suggestion that "many students probably will find it a very clear and convenient mode of exhibiting the logical analysis of the course of argument, to draw it out in the form of a Tree, or Logical Division".

However, the technique did not become widely used, possibly because for complex arguments, it involved much writing and rewriting of the premises.

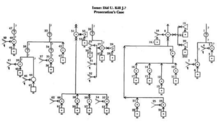

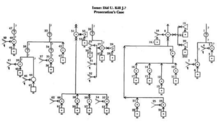

Wigmore evidence chart, from 1905

Legal philosopher and theorist John Henry Wigmore produced maps of legal arguments using numbered premises in the early 20th century,[17] based in part on the ideas of the 19th-century philosopher Henry Sidgwick, who used lines to indicate relations between terms.[18]

Anglophone argument diagramming in the 20th century

In response to the failure of formal reduction of informal argumentation, English-speaking argumentation theory developed diagrammatic approaches to informal reasoning over a period of fifty years.

Monroe Beardsley proposed a form of argument diagram in 1950.[12] His method of marking up an argument and representing its components with linked numbers became a standard and is still widely used. He also introduced terminology that is still current for describing convergent, divergent and serial arguments.

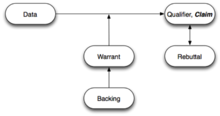

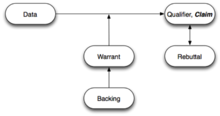

A generalised Toulmin diagram

Stephen Toulmin, in his groundbreaking and influential 1958 book The Uses of Argument,[19] identified several elements of an argument, which have since been generalized. The Toulmin diagram is widely used in critical-thinking education.[20][21] Whilst Toulmin eventually had a significant impact on the development of informal logic, he had little initial impact, and the Beardsley approach to diagramming arguments, along with its later developments, became the standard approach in this field. Toulmin introduced something that was missing from Beardsley's approach. In Beardsley, "arrows link reasons and conclusions (but) no support is given to the implication itself between them. There is no theory, in other words, of inference distinguished from logical deduction, the passage is always deemed not controversial and not subject to support and evaluation".[22] Toulmin introduced the concept of warrant, which "can be considered as representing the reasons behind the inference, the backing that authorizes the link".[23]

Beardsley's approach was refined by Stephen N. Thomas, whose 1973 book Practical Reasoning in Natural Language[24] introduced the term linked to describe arguments where the premises necessarily worked together to support the conclusion.[25] However, the distinction between dependent and independent premises had been drawn before this.[25] The introduction of the linked structure made it possible for argument maps to represent missing or "hidden" premises. In addition, Thomas suggested showing reasons both for and against a conclusion, with the reasons against represented by dotted arrows. Thomas introduced the term argument diagram and defined basic reasons as those that were not supported by any others in the argument, and the final conclusion as that which was not used to support any further conclusion.
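Thomas's two definitions are purely structural, so they can be computed from the arrows alone; any claim that is neither a basic reason nor the final conclusion is an intermediate conclusion. A sketch, assuming a map stored as hypothetical `(premise, conclusion)` pairs and leaving out reasons against (Thomas's dotted arrows) for simplicity:

```python
def classify(arrows):
    """Label each claim using Thomas's structural definitions."""
    supported = {c for _, c in arrows}   # claims that some other claim supports
    supporting = {p for p, _ in arrows}  # claims used to support something
    roles = {}
    for claim in supported | supporting:
        if claim not in supported:
            roles[claim] = "basic reason"
        elif claim not in supporting:
            roles[claim] = "final conclusion"
        else:
            roles[claim] = "intermediate conclusion"
    return roles


# Hypothetical map: 5 supports 4; 4 and 2 support 1.
roles = classify([(5, 4), (4, 1), (2, 1)])
for claim in sorted(roles):
    print(claim, roles[claim])
```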

Scriven's argument diagram. The explicit premise 1 is conjoined with additional unstated premises a and b to imply 2.

Michael Scriven further developed the Beardsley-Thomas approach in his 1976 book Reasoning.[26] Whereas Beardsley had said "At first, write out the statements...after a little practice, refer to the statements by number alone",[27] Scriven advocated clarifying the meaning of the statements, listing them, and then using a tree diagram with numbers to display the structure. Missing premises (unstated assumptions) were to be included and marked with a letter of the alphabet instead of a number, to set them off from the explicit statements. Scriven introduced counterarguments, which Toulmin had defined as rebuttals, into his diagrams.[28] This also enabled the diagramming of "balance of consideration" arguments.[29]
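Scriven's convention of numbers for explicit statements and letters for unstated premises is easy to apply mechanically. A sketch of the structure in the caption above (explicit premise 1, together with unstated premises a and b, implies 2), with placeholder statement texts and the hypothetical helpers `is_unstated` and `unstated_premises`:

```python
# Scriven-style labels: digits for explicit statements, letters for unstated ones.
STATEMENTS = {
    "1": "Explicit premise",
    "a": "First unstated assumption",
    "b": "Second unstated assumption",
    "2": "Conclusion",
}
# One linked inference: 1, a and b together imply 2.
INFERENCE = ({"1", "a", "b"}, "2")


def is_unstated(label):
    """Letters mark premises supplied by the analyst; numbers mark the author's own."""
    return label.isalpha()


def unstated_premises(inference):
    premises, _conclusion = inference
    return sorted(p for p in premises if is_unstated(p))


print(unstated_premises(INFERENCE))  # ['a', 'b']
```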

In the 1990s, Tim van Gelder and colleagues developed a series of computer software applications that permitted the premises to be fully stated and edited in the diagram, rather than in a legend.[30] Van Gelder's first program, Reason!Able, was superseded by two subsequent programs, bCisive and Rationale.[31]

Throughout the 1990s and 2000s, many other software applications were developed for argument visualization. By 2013, more than 60 such software systems existed.[32] One of the differences between these software systems is whether collaboration is supported.[33] Single-user argumentation systems include Convince Me, iLogos, LARGO, Athena, Araucaria, and Carneades; small-group argumentation systems include Digalo, QuestMap, Compendium, Belvedere, and AcademicTalk; community argumentation systems include Debategraph and Collaboratorium.[33] For more software examples, see § External links.

In 1998, a series of large-scale argument maps released by Robert E. Horn stimulated widespread interest in argument mapping.[34]

Applications

Argument maps have been applied in many areas, but foremost in educational, academic and business settings, including design rationale.[35] Argument maps are also used in forensic science,[36] law, and artificial intelligence.[37] It has also been proposed that argument mapping has great potential to improve how we understand and execute democracy, in reference to the ongoing evolution of e-democracy.[38]

Difficulties with the philosophical tradition

It has traditionally been hard to separate teaching critical thinking from the philosophical tradition of teaching logic and method, and most critical thinking textbooks have been written by philosophers. Informal logic textbooks are replete with philosophical examples, but it is unclear whether the approach in such textbooks transfers to non-philosophy students.[20] Such classes appear to produce little statistically measurable improvement. Argument mapping, however, has a measurable effect according to many studies.[39] For example, instruction in argument mapping has been shown to improve the critical thinking skills of business students.[40]

Evidence that argument mapping improves critical thinking ability

There is empirical evidence that the skills developed in argument-mapping-based critical thinking courses substantially transfer to critical thinking done without argument maps. Álvarez's meta-analysis found that such critical thinking courses produced gains of around 0.70 SD, about twice the gain produced by standard critical-thinking courses.[41] The tests used in the reviewed studies were standard critical-thinking tests.
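A gain reported in SD units is a standardized mean difference. One common form, shown here as an illustration and not necessarily the exact computation used in the meta-analysis, divides the mean pre-to-post gain by a standard deviation $s$ of the test scores. On a hypothetical test whose scores have a standard deviation of 10 points, a 0.70 SD gain is a mean improvement of about 7 points, while a course with half that effect (as "about twice" implies for standard courses) would gain about 3.5 points:

```latex
d = \frac{\bar{x}_{\text{post}} - \bar{x}_{\text{pre}}}{s},
\qquad
d = 0.70,\; s = 10
\;\Rightarrow\;
\bar{x}_{\text{post}} - \bar{x}_{\text{pre}} = 0.70 \times 10 = 7 \text{ points}
```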

How argument mapping helps with critical thinking

Argument mapping has been used in a number of disciplines, such as philosophy, management reporting, military and intelligence analysis, and public debates.[35]

- Logical structure: Argument maps display an argument's logical structure more clearly than does the standard linear way of presenting arguments.

- Critical thinking concepts: In learning to argument map, students master such key critical thinking concepts as "reason", "objection", "premise", "conclusion", "inference", "rebuttal", "unstated assumption", "co-premise", "strength of evidence", "logical structure", "independent evidence", etc. Mastering such concepts is not just a matter of memorizing their definitions or even being able to apply them correctly; it is also understanding why the distinctions these words mark are important and using that understanding to guide one's reasoning.

- Visualization: Humans are highly visual and argument mapping may provide students with a basic set of visual schemas with which to understand argument structures.

- More careful reading and listening: Learning to argument map teaches people to read and listen more carefully, and highlights for them the key questions "What is the logical structure of this argument?" and "How does this sentence fit into the larger structure?" In-depth cognitive processing is thus more likely.

- More careful writing and speaking: Argument mapping helps people to state their reasoning and evidence more precisely, because the reasoning and evidence must fit explicitly into the map's logical structure.

- Literal and intended meaning: Often, many statements in an argument do not precisely assert what the author meant. Learning to argument map enhances the complex skill of distinguishing literal from intended meaning.

- Externalization: Writing something down and reviewing what one has written often helps reveal gaps and clarify one's thinking. Because the logical structure of argument maps is clearer than that of linear prose, the benefits of mapping will exceed those of ordinary writing.

- Anticipating replies: Important to critical thinking is anticipating objections and considering the plausibility of different rebuttals. Mapping develops this anticipation skill, and so improves analysis.

Standards

Argument Interchange Format

The Argument Interchange Format, AIF, is an international effort to develop a representational mechanism for exchanging argument resources between research groups, tools, and domains using a semantically rich language.[42] AIF-RDF is the extended ontology represented in the Resource Description Framework Schema (RDFS) semantic language. Though AIF is still something of a moving target, it is settling down.[43]
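As a rough illustration of what an interchange format has to pin down, the sketch below serializes a small map to JSON, reads it back, and validates it. The JSON layout is hypothetical and far simpler than AIF-RDF; it only borrows AIF's basic idea that claims (information, or "I", nodes) are never linked to each other directly, but only through separate nodes that record an inference ("RA") or a conflict ("CA"). The helper `check` is likewise hypothetical.

```python
import json

# Hypothetical layout loosely inspired by AIF: claims ("I" nodes) never link
# directly to each other; an inference ("RA") or conflict ("CA") node sits between them.
ARGUMENT = {
    "nodes": [
        {"id": "c1", "type": "I", "text": "Conclusion"},
        {"id": "c2", "type": "I", "text": "Premise"},
        {"id": "c3", "type": "I", "text": "Objection"},
        {"id": "s1", "type": "RA"},
        {"id": "s2", "type": "CA"},
    ],
    "edges": [
        ["c2", "s1"], ["s1", "c1"],  # c2 supports c1 via inference node s1
        ["c3", "s2"], ["s2", "c1"],  # c3 attacks c1 via conflict node s2
    ],
}


def check(doc):
    """Reject any edge that joins two claims without an inference or conflict node."""
    types = {node["id"]: node["type"] for node in doc["nodes"]}
    for src, dst in doc["edges"]:
        if types[src] == "I" and types[dst] == "I":
            raise ValueError(f"direct claim-to-claim edge {src} -> {dst}")
    return doc


# Round trip: what one tool writes, another should be able to read and validate.
restored = check(json.loads(json.dumps(ARGUMENT)))
```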

Legal Knowledge Interchange Format

The Legal Knowledge Interchange Format (LKIF),[44] developed in the European ESTRELLA project,[45] is an XML schema for rules and arguments, designed with the goal of becoming a standard for representing and interchanging policy, legislation and cases, including their justificatory arguments, in the legal domain. LKIF builds on and uses the Web Ontology Language (OWL) for representing concepts and includes a reusable basic ontology of legal concepts.

Notes

- Freeman 1991, pp. 49–90

- For example: Davies 2012; Facione 2013, p. 86; Fisher 2004; Kelley 2014, p. 73; Kunsch, Schnarr & van Tyle 2014; Walton 2013, p. 10

- For example: Culmsee & Awati 2013; Hoffmann & Borenstein 2013; Metcalfe & Sastrowardoyo 2013; Ricky Ohl, "Computer supported argument visualisation: modelling in consultative democracy around wicked problems", in Okada, Buckingham Shum & Sherborne 2014, pp. 361–380

- For example: Davies 2010; Hunter 2008; Okada, Buckingham Shum & Sherborne 2014, pp. vii–x, 4

- Reed & Rowe 2007, p. 64

- For example: Walton 2013, pp. 18–20

- Reed, Walton & Macagno 2007, p. 2

- Freeman 1991, pp. 49–90; Reed & Rowe 2007

- Harrell 2010, p. 19

- Freeman 1991, pp. 91–110; Harrell 2010, p. 20

- Beardsley 1950, pp. 18–19; Reed, Walton & Macagno 2007, pp. 3–8; Harrell 2010, pp. 19–21

- Beardsley 1950

- Harrell 2010, p. 28

- For example: Hoffmann & Borenstein 2013; Hoffmann 2016

- See section 4.2, "Argument maps as reasoning tools", in Brun & Betz 2016

- Whately 1834 (first published 1826)

- Wigmore 1913

- Goodwin 2000

- Toulmin 2003 (first published 1958)

- Simon, Erduran & Osborne 2006

- Böttcher & Meisert 2011; Macagno & Konstantinidou 2013

- Reed, Walton & Macagno 2007, p. 8

- Reed, Walton & Macagno 2007, p. 9

- Thomas 1997 (first published 1973)

- Snoeck Henkemans 2000, p. 453

- Scriven 1976

- Beardsley 1950, p. 21

- Reed, Walton & Macagno 2007, pp. 10–11

- van Eemeren et al. 1996, p. 175

- van Gelder 2007

- Berg et al. 2009

- Walton 2013, p. 11

- Scheuer et al. 2010

- Holmes 1999; Horn 1998 and Robert E. Horn, "Infrastructure for navigating interdisciplinary debates: critical decisions for representing argumentation", in Kirschner, Buckingham Shum & Carr 2003, pp. 165–184

- Kirschner, Buckingham Shum & Carr 2003; Okada, Buckingham Shum & Sherborne 2014

- For example: Bex 2011

- For example: Verheij 2005; Reed, Walton & Macagno 2007; Walton 2013

- Hilbert 2009

- Twardy 2004; Álvarez Ortiz 2007; Harrell 2008; Yanna Rider and Neil Thomason, "Cognitive and pedagogical benefits of argument mapping: LAMP guides the way to better thinking", in Okada, Buckingham Shum & Sherborne 2014, pp. 113–134; Dwyer 2011; Davies 2012

- Carrington et al. 2011; Kunsch, Schnarr & van Tyle 2014

- Álvarez Ortiz 2007, pp. 69–70 et seq.

- See the AIF original draft description (2006) and the full AIF-RDF ontology specifications in RDFS format.

- Bex et al. 2013

- Boer, Winkels & Vitali 2008

- "Estrella project website". estrellaproject.org. Archived from the original on 2016-02-12. Retrieved 2016-02-24.

References

- Álvarez Ortiz, Claudia María (2007). Does philosophy improve critical thinking skills? (PDF) (M.A. thesis). Department of Philosophy, University of Melbourne. OCLC 271475715. Archived from the original (PDF) on 2012-07-08.

- Beardsley, Monroe C. (1950). Practical logic. New York: Prentice-Hall. OCLC 4318971.

- Berg, Timo ter; van Gelder, Tim; Patterson, Fiona; Teppema, Sytske (2009). Critical thinking: reasoning and communicating with Rationale. Amsterdam: Pearson Education Benelux. ISBN 9043018015. OCLC 301884530.

- Bex, Floris J. (2011). Arguments, stories and criminal evidence: a formal hybrid theory. Law and philosophy library. 92. Dordrecht; New York: Springer. ISBN 9789400701397. OCLC 663950184. doi:10.1007/978-94-007-0140-3.

- Bex, Floris J.; Modgil, Sanjay; Prakken, Henry; Reed, Chris (2013). "On logical specifications of the Argument Interchange Format" (PDF). Journal of Logic and Computation. 23 (5): 951–989. doi:10.1093/logcom/exs033.

- Boer, Alexander; Winkels, Radboud; Vitali, Fabio (2008). "MetaLex XML and the Legal Knowledge Interchange Format" (PDF). In Casanovas, Pompeu; Sartor, Giovanni; Casellas, Núria; Rubino, Rossella. Computable models of the law: languages, dialogues, games, ontologies. Lecture notes in computer science. 4884. Berlin; New York: Springer. pp. 21–41. ISBN 9783540855682. OCLC 244765580. doi:10.1007/978-3-540-85569-9_2. Retrieved 2016-02-24.

- Böttcher, Florian; Meisert, Anke (February 2011). "Argumentation in science education: a model-based framework". Science & Education. 20 (2): 103–140. doi:10.1007/s11191-010-9304-5.

- Brun, Georg; Betz, Gregor (2016). "Analysing practical argumentation" (PDF). In Hansson, Sven Ove; Hirsch Hadorn, Gertrude. The argumentative turn in policy analysis: reasoning about uncertainty. Logic, argumentation & reasoning. 10. Cham; New York: Springer-Verlag. pp. 39–77. ISBN 9783319305479. OCLC 950884495. doi:10.1007/978-3-319-30549-3_3.

- Carrington, Michal; Chen, Richard; Davies, Martin; Kaur, Jagjit; Neville, Benjamin (June 2011). "The effectiveness of a single intervention of computer‐aided argument mapping in a marketing and a financial accounting subject" (PDF). Higher Education Research & Development. 30 (3): 387–403. doi:10.1080/07294360.2011.559197. Retrieved 2016-02-24.

- Culmsee, Paul; Awati, Kailash (2013) [2011]. "Chapter 7: Visualising reasoning, and Chapter 8: Argumentation-based rationale". The heretic's guide to best practices: the reality of managing complex problems in organisations. Bloomington, IN: iUniverse, Inc. pp. 153–211. ISBN 9781462058549. OCLC 767703320.

- Davies, W. Martin (November 2010). "Concept mapping, mind mapping and argument mapping: what are the differences and do they matter?". Higher Education. 62 (3): 279–301. doi:10.1007/s10734-010-9387-6.

- Davies, W. Martin (Summer 2012). "Computer-aided argument mapping as a tool for teaching critical thinking". International Journal of Learning and Media. 4 (3-4): 79–84. doi:10.1162/IJLM_a_00106.

- Dwyer, Christopher Peter (2011). The evaluation of argument mapping as a learning tool (PDF) (Ph.D. thesis). School of Psychology, National University of Ireland, Galway. OCLC 812818648. Retrieved 2016-02-24.

- van Eemeren, Frans H.; Grootendorst, Rob; Snoeck Henkemans, A. Francisca; Blair, J. Anthony; Johnson, Ralph H.; Krabbe, Erik C. W.; Plantin, Christian; Walton, Douglas N.; Willard, Charles A.; Woods, John (1996). Fundamentals of argumentation theory: a handbook of historical backgrounds and contemporary developments. Mahwah, NJ: Lawrence Erlbaum Associates. ISBN 0805818618. OCLC 33970847. doi:10.4324/9780203811306.

- Facione, Peter A. (2013) [2011]. THINK critically (2nd ed.). Boston: Pearson. ISBN 0205490980. OCLC 770694200.

- Fisher, Alec (2004) [1988]. The logic of real arguments (2nd ed.). Cambridge; New York: Cambridge University Press. ISBN 0521654815. OCLC 54400059. doi:10.1017/CBO9780511818455. Retrieved 2016-02-24.

- Freeman, James B. (1991). Dialectics and the macrostructure of arguments: a theory of argument structure. Berlin; New York: Foris Publications. ISBN 3110133903. OCLC 24429943. Retrieved 2016-02-24.

- van Gelder, Tim (2007). "The rationale for Rationale" (PDF). Law, Probability and Risk. 6 (1-4): 23–42. doi:10.1093/lpr/mgm032.

- Goodwin, Jean (2000). "Wigmore's chart method". Informal Logic. 20 (3): 223–243.

- Harrell, Maralee (December 2008). "No computer program required: even pencil-and-paper argument mapping improves critical-thinking skills" (PDF). Teaching Philosophy. 31 (4): 351–374. doi:10.5840/teachphil200831437. Archived from the original (PDF) on 2013-12-29.

- Harrell, Maralee (August 2010). "Creating argument diagrams". academia.edu.

- Hilbert, Martin (April 2009). "The maturing concept of e-democracy: from e-voting and online consultations to democratic value out of jumbled online chatter" (PDF). Journal of Information Technology and Politics. 6 (2): 87–110. doi:10.1080/19331680802715242.

- Hoffmann, Michael H. G. (November 2016). "Reflective argumentation: a cognitive function of arguing". Argumentation. 30 (4): 365–397. doi:10.1007/s10503-015-9388-9.

- Hoffmann, Michael H. G.; Borenstein, Jason (February 2013). "Understanding ill-structured engineering ethics problems through a collaborative learning and argument visualization approach". Science and Engineering Ethics. 20 (1): 261–276. PMID 23420467. doi:10.1007/s11948-013-9430-y.

- Holmes, Bob (10 July 1999). "Beyond words". New Scientist (2194). Archived from the original on 28 September 2008.

- Horn, Robert E. (1998). Visual language: global communication for the 21st century. Bainbridge Island, WA: MacroVU, Inc. ISBN 189263709X. OCLC 41138655.

- Hunter, Lawrie (2008). "Cmap linking phrase constraint for the structural narrowing of constructivist second language tasks" (PDF). In Cañas, Alberto J.; Reiska, Priit; Åhlberg, Mauri K.; Novak, Joseph Donald. CMC 2008: Third International Conference on Concept Mapping, Tallinn, Estonia and Helsinki, Finland, 22–25 September 2008: proceedings. 1. Tallinn: Tallinn University. pp. 146–151. ISBN 9789985585849.

- Kelley, David (2014) [1988]. The art of reasoning: an introduction to logic and critical thinking (4th ed.). New York: W. W. Norton & Company. ISBN 0393930785. OCLC 849801096.

- Kirschner, Paul Arthur; Buckingham Shum, Simon J; Carr, Chad S, eds. (2003). Visualizing argumentation: software tools for collaborative and educational sense-making. Computer supported cooperative work. New York: Springer. ISBN 1852336641. OCLC 50676911. doi:10.1007/978-1-4471-0037-9. Retrieved 2016-02-24.

- Kunsch, David W.; Schnarr, Karin; van Tyle, Russell (November 2014). "The use of argument mapping to enhance critical thinking skills in business education". Journal of Education for Business. 89 (8): 403–410. doi:10.1080/08832323.2014.925416.

- Macagno, Fabrizio; Konstantinidou, Aikaterini (August 2013). "What students' arguments can tell us: using argumentation schemes in science education". Argumentation. 27 (3): 225–243. SSRN 2185945. doi:10.1007/s10503-012-9284-5.

- Metcalfe, Mike; Sastrowardoyo, Saras (November 2013). "Complex project conceptualisation and argument mapping". International Journal of Project Management. 31 (8): 1129–1138. doi:10.1016/j.ijproman.2013.01.004.

- Okada, Alexandra; Buckingham Shum, Simon J; Sherborne, Tony, eds. (2014) [2008]. Knowledge cartography: software tools and mapping techniques. Advanced information and knowledge processing (2nd ed.). New York: Springer. ISBN 9781447164692. OCLC 890438015. doi:10.1007/978-1-4471-6470-8. Retrieved 2016-02-24.

- Reed, Chris; Rowe, Glenn (2007). "A pluralist approach to argument diagramming" (PDF). Law, Probability and Risk. 6 (1-4): 59–85. doi:10.1093/lpr/mgm030. Retrieved 2016-02-24.

- Reed, Chris; Walton, Douglas; Macagno, Fabrizio (March 2007). "Argument diagramming in logic, law and artificial intelligence". The Knowledge Engineering Review. 22 (1): 1–22. doi:10.1017/S0269888907001051.

- Scheuer, Oliver; Loll, Frank; Pinkwart, Niels; McLaren, Bruce M. (2010). "Computer-supported argumentation: a review of the state of the art" (PDF). International Journal of Computer-Supported Collaborative Learning. 5 (1): 43–102. doi:10.1007/s11412-009-9080-x.

- Scriven, Michael (1976). Reasoning. New York: McGraw-Hill. ISBN 0070558825. OCLC 2800373.

- Simon, Shirley; Erduran, Sibel; Osborne, Jonathan (2006). "Learning to teach argumentation: research and development in the science classroom" (PDF). International Journal of Science Education. 28 (2-3): 235–260. doi:10.1080/09500690500336957.

- Snoeck Henkemans, A. Francisca (November 2000). "State-of-the-art: the structure of argumentation". Argumentation. 14 (4): 447–473. doi:10.1023/A:1007800305762.

- Thomas, Stephen N. (1997) [1973]. Practical reasoning in natural language (4th ed.). Upper Saddle River, NJ: Prentice-Hall. ISBN 0136782698. OCLC 34745923.

- Toulmin, Stephen E. (2003) [1958]. The uses of argument (Updated ed.). Cambridge; New York: Cambridge University Press. ISBN 0521534836. OCLC 57253830. doi:10.1017/CBO9780511840005. Retrieved 2016-02-24.

- Twardy, Charles R. (June 2004). "Argument maps improve critical thinking" (PDF). Teaching Philosophy. 27 (2): 95–116. doi:10.5840/teachphil200427213.

- Verheij, Bart (2005). Virtual arguments: on the design of argument assistants for lawyers and other arguers. Information technology & law series. 6. The Hague: T.M.C. Asser Press. ISBN 9789067041904. OCLC 59617214.

- Walton, Douglas N. (2013). Methods of argumentation. Cambridge; New York: Cambridge University Press. ISBN 1107677335. OCLC 830523850. doi:10.1017/CBO9781139600187. Retrieved 2016-02-24.

- Whately, Richard (1834) [1826]. Elements of logic: comprising the substance of the article in the Encyclopædia metropolitana: with additions, &c. (5th ed.). London: B. Fellowes. OCLC 1739330. Retrieved 2016-02-24.

- Wigmore, John Henry (1913). The principles of judicial proof: as given by logic, psychology, and general experience, and illustrated in judicial trials. Boston: Little Brown. OCLC 1938596. Retrieved 2016-02-24.

Further reading


- Facione, Peter A.; Facione, Noreen C. (2007). Thinking and reasoning in human decision making: the method of argument and heuristic analysis. Millbrae, CA: California Academic Press. ISBN 1891557580. OCLC 182039452.

- van Gelder, Tim (17 February 2009). "What is argument mapping?". timvangelder.com. Retrieved 12 January 2015.

- van Gelder, Tim (2015). "Using argument mapping to improve critical thinking skills". In Davies, Martin; Barnett, Ronald. The Palgrave handbook of critical thinking in higher education. New York: Palgrave Macmillan. pp. 183–192. ISBN 9781137378033. OCLC 894935460. doi:10.1057/9781137378057_12.

- Harrell, Maralee (June 2005). "Using argument diagramming software in the classroom" (PDF). Teaching Philosophy. 28 (2): 163–177. doi:10.5840/teachphil200528222. Archived from the original (PDF) on 2006-09-07.

- Schneider, Jodi; Groza, Tudor; Passant, Alexandre (April 2013). "A review of argumentation for the social semantic web" (PDF). Semantic Web. 4 (2): 159–218.

External links

Argument mapping software

Online, collaborative software

- AGORA-net (user interface in English, German, Spanish, Chinese, and Russian)

- Arguman (open source; English, Turkish, and Chinese)

- bCisiveOnline

- Carneades (open source, argument (re)construction, evaluation, mapping and interchange)

- Debategraph

- Deliberatorium

- TruthMapping