From Wikipedia, the free encyclopedia/Blogger Ref http://www.p2pfoundation.net/Universal-Debating_Project

Confirmation bias, also called myside bias, is the tendency to search for, interpret, favor, and recall information in a way that confirms one's beliefs or hypotheses.[Note 1][1] It is a type of cognitive bias and a systematic error of inductive reasoning. People display this bias when they gather or remember information selectively, or when they interpret it in a biased way. The effect is stronger for emotionally charged issues and for deeply entrenched beliefs. People also tend to interpret ambiguous evidence as supporting their existing position. Biased search, interpretation and memory have been invoked to explain attitude polarization (when a disagreement becomes more extreme even though the different parties are exposed to the same evidence), belief perseverance (when beliefs persist after the evidence for them is shown to be false), the irrational primacy effect (a greater reliance on information encountered early in a series) and illusory correlation (when people falsely perceive an association between two events or situations).

A series of experiments in the 1960s suggested that people are biased toward confirming their existing beliefs. Later work re-interpreted these results as a tendency to test ideas in a one-sided way, focusing on one possibility and ignoring alternatives. In certain situations, this tendency can bias people's conclusions. Explanations for the observed biases include wishful thinking and the limited human capacity to process information. Another explanation is that people show confirmation bias because they are weighing up the costs of being wrong, rather than investigating in a neutral, scientific way.

Confirmation biases contribute to overconfidence in personal beliefs and can maintain or strengthen beliefs in the face of contrary evidence. Poor decisions due to these biases have been found in political and organizational contexts.[2][3][Note 2]

Some psychologists restrict the term confirmation bias to selective collection of evidence that supports what one already believes while ignoring or rejecting evidence that supports a different conclusion. Other psychologists apply the term more broadly to the tendency to preserve one's existing beliefs when searching for evidence, interpreting it, or recalling it from memory.[5][Note 3]

Experiments have found repeatedly that people tend to test hypotheses in a one-sided way, by searching for evidence consistent with their current hypothesis.[7][8] Rather than searching through all the relevant evidence, they phrase questions to receive an affirmative answer that supports their hypothesis.[9] They look for the consequences that they would expect if their hypothesis were true, rather than what would happen if it were false.[9] For example, someone using yes/no questions to find a number he or she suspects to be the number 3 might ask, "Is it an odd number?" People prefer this type of question, called a "positive test", even when a negative test such as "Is it an even number?" would yield exactly the same information.[10] However, this does not mean that people seek tests that guarantee a positive answer. In studies where subjects could select either such pseudo-tests or genuinely diagnostic ones, they favored the genuinely diagnostic.[11][12]
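The claim that a positive test and its negative counterpart can carry exactly the same information can be checked with a small sketch. It assumes, purely for illustration, that the hidden number lies between 1 and 10; the range and the helper `partition` are hypothetical and are not taken from the cited studies.

```python
def partition(candidates, question):
    """Split the candidates by the answer a yes/no question would receive."""
    yes = frozenset(n for n in candidates if question(n))
    no = frozenset(candidates) - yes
    return yes, no


def is_odd(n):
    return n % 2 == 1


def is_even(n):
    return n % 2 == 0


# Assumed range of possible hidden numbers (illustrative only).
candidates = range(1, 11)

odd_split = partition(candidates, is_odd)    # positive test for "it is 3"
even_split = partition(candidates, is_even)  # negative test for "it is 3"

# Both questions carve the candidates into the same two groups;
# only the "yes" and "no" labels are swapped.
same_information = set(odd_split) == set(even_split)

# A pseudo-test that guarantees "yes" leaves one group empty,
# so its answer rules nothing out.
pseudo_split = partition(candidates, lambda n: True)
pseudo_is_uninformative = len(pseudo_split[1]) == 0
```

Under these assumptions, either question narrows the candidates to the same subset, which is why the preference for the "odd" phrasing is a matter of framing rather than of informativeness.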

The preference for positive tests in itself is not a bias, since positive tests can be highly informative.[13] However, in combination with other effects, this strategy can confirm existing beliefs or assumptions, independently of whether they are true.[14] In real-world situations, evidence is often complex and mixed. For example, various contradictory ideas about someone could each be supported by concentrating on one aspect of his or her behavior.[8] Thus any search for evidence in favor of a hypothesis is likely to succeed.[14] One illustration of this is the way the phrasing of a question can significantly change the answer.[8] For example, people who are asked, "Are you happy with your social life?" report greater satisfaction than those asked, "Are you unhappy with your social life?"[15]

Even a small change in a question's wording can affect how people search through available information, and hence the conclusions they reach. This was shown using a fictional child custody case.[16] Participants read that Parent A was moderately suitable to be the guardian in multiple ways. Parent B had a mix of salient positive and negative qualities: a close relationship with the child but a job that would take him or her away for long periods of time. When asked, "Which parent should have custody of the child?" the majority of participants chose Parent B, looking mainly for positive attributes. However, when asked, "Which parent should be denied custody of the child?" they looked for negative attributes and the majority answered that Parent B should be denied custody, implying that Parent A should have custody.[16]

Similar studies have demonstrated how people engage in a biased search for information, but also that this phenomenon may be limited by a preference for genuine diagnostic tests. In an initial experiment, participants rated another person on the introversion–extroversion personality dimension on the basis of an interview. They chose the interview questions from a given list. When the interviewee was introduced as an introvert, the participants chose questions that presumed introversion, such as, "What do you find unpleasant about noisy parties?" When the interviewee was described as extroverted, almost all the questions presumed extroversion, such as, "What would you do to liven up a dull party?" These loaded questions gave the interviewees little or no opportunity to falsify the hypothesis about them.[17] A later version of the experiment gave the participants less presumptive questions to choose from, such as, "Do you shy away from social interactions?"[18] Participants preferred to ask these more diagnostic questions, showing only a weak bias towards positive tests. This pattern, of a main preference for diagnostic tests and a weaker preference for positive tests, has been replicated in other studies.[18]

Personality traits influence and interact with biased search processes.[19] Individuals vary in their abilities to defend their attitudes from external attacks in relation to selective exposure. Selective exposure occurs when individuals search for information that is consistent, rather than inconsistent, with their personal beliefs.[20] An experiment examined the extent to which individuals could refute arguments that contradicted their personal beliefs.[19] People with high confidence levels more readily seek out information that contradicts their personal position in order to form an argument. Individuals with low confidence levels do not seek out contradictory information and prefer information that supports their personal position. People generate and evaluate evidence in arguments in ways that are biased towards their own beliefs and opinions.[21] Heightened confidence levels decrease the preference for information that supports individuals' personal beliefs.

Another experiment gave participants a complex rule-discovery task that involved moving objects simulated by a computer.[22] Objects on the computer screen followed specific laws, which the participants had to figure out. Participants could "fire" objects across the screen to test their hypotheses. Despite making many attempts over a ten-hour session, none of the participants figured out the rules of the system. They typically attempted to confirm rather than falsify their hypotheses, and were reluctant to consider alternatives. Even after seeing objective evidence that refuted their working hypotheses, they frequently continued doing the same tests. Some of the participants were taught proper hypothesis-testing, but these instructions had almost no effect.[22]

Confirmation biases are not limited to the collection of evidence. Even if two individuals have the same information, the way they interpret it can be biased.

A team at Stanford University conducted an experiment involving participants who felt strongly about capital punishment, with half in favor and half against it.[24][25] Each participant read descriptions of two studies: a comparison of U.S. states with and without the death penalty, and a comparison of murder rates in a state before and after the introduction of the death penalty. After reading a quick description of each study, the participants were asked whether their opinions had changed. Then, they read a more detailed account of each study's procedure and had to rate whether the research was well-conducted and convincing.[24] In fact, the studies were fictional. Half the participants were told that one kind of study supported the deterrent effect and the other undermined it, while for other participants the conclusions were swapped.[24][25]

The participants, whether supporters or opponents, reported shifting their attitudes slightly in the direction of the first study they read. Once they read the more detailed descriptions of the two studies, they almost all returned to their original belief regardless of the evidence provided, pointing to details that supported their viewpoint and disregarding anything contrary. Participants described studies supporting their pre-existing view as superior to those that contradicted it, in detailed and specific ways.[24][26] Writing about a study that seemed to undermine the deterrence effect, a death penalty proponent wrote, "The research didn't cover a long enough period of time", while an opponent's comment on the same study said, "No strong evidence to contradict the researchers has been presented".[24] The results illustrated that people set higher standards of evidence for hypotheses that go against their current expectations. This effect, known as "disconfirmation bias", has been supported by other experiments.[27]

Another study of biased interpretation occurred during the 2004 US presidential election and involved participants who reported having strong feelings about the candidates. They were shown apparently contradictory pairs of statements, either from Republican candidate George W. Bush, Democratic candidate John Kerry or a politically neutral public figure. They were also given further statements that made the apparent contradiction seem reasonable. From these three pieces of information, they had to decide whether or not each individual's statements were inconsistent.[28]:1948 There were strong differences in these evaluations, with participants much more likely to interpret statements from the candidate they opposed as contradictory.[28]:1951

In this experiment, the participants made their judgments while in a magnetic resonance imaging (MRI) scanner which monitored their brain activity. As participants evaluated contradictory statements by their favored candidate, emotional centers of their brains were aroused. This did not happen with the statements by the other figures. The experimenters inferred that the different responses to the statements were not due to passive reasoning errors. Instead, the participants were actively reducing the cognitive dissonance induced by reading about their favored candidate's irrational or hypocritical behavior.[28]

Biases in belief interpretation are persistent, regardless of intelligence level. Participants in an experiment took the SAT test (a college admissions test used in the United States) to assess their intelligence levels. They then read information regarding safety concerns for vehicles, and the experimenters manipulated the national origin of the car. American participants gave their opinion on whether the car should be banned on a six-point scale, where one indicated "definitely yes" and six indicated "definitely no." Participants first evaluated whether they would allow a dangerous German car on American streets and a dangerous American car on German streets. Participants believed that the dangerous German car on American streets should be banned more quickly than the dangerous American car on German streets. The rate at which participants would ban a car did not differ across intelligence levels.[21]

Biased interpretation is not restricted to emotionally significant topics. In another experiment, participants were told a story about a theft. They had to rate the evidential importance of statements arguing either for or against a particular character being responsible. When they hypothesized that character's guilt, they rated statements supporting that hypothesis as more important than conflicting statements.[29]

In one study, participants read a profile of a woman which described a mix of introverted and extroverted behaviors.[33] They later had to recall examples of her introversion and extroversion. One group was told this was to assess the woman for a job as a librarian, while a second group was told it was for a job in real estate sales. There was a significant difference between what these two groups recalled, with the "librarian" group recalling more examples of introversion and the "sales" group recalling more extroverted behavior.[33] A selective memory effect has also been shown in experiments that manipulate the desirability of personality types.[31][34] In one of these, a group of participants was shown evidence that extroverted people are more successful than introverts. Another group was told the opposite. In a subsequent, apparently unrelated, study, they were asked to recall events from their lives in which they had been either introverted or extroverted. Each group of participants provided more memories connecting themselves with the more desirable personality type, and recalled those memories more quickly.[35]

Changes in emotional states can also influence memory recall.[36][37] Participants rated how they felt when they had first learned that O.J. Simpson had been acquitted of murder charges.[36] They described their emotional reactions and confidence regarding the verdict one week, two months, and one year after the trial. Results indicated that participants' assessments of Simpson's guilt changed over time. The more that participants' opinions of the verdict had changed, the less stable were their memories of their initial emotional reactions. When participants recalled their initial emotional reactions two months and a year later, past appraisals closely resembled current appraisals of emotion. People demonstrate sizable myside bias when discussing their opinions on controversial topics.[21] Memory recall and construction of experiences undergo revision in relation to corresponding emotional states.

Myside bias has been shown to influence the accuracy of memory recall.[37] In an experiment, widows and widowers rated the intensity of their experienced grief six months and five years after the deaths of their spouses. Participants reported more intense grief at six months than at five years. Yet, when the participants were asked after five years how they had felt six months after the death of their significant other, the intensity of grief they recalled was highly correlated with their current level of grief. Individuals appear to use their current emotional states to infer how they must have felt when experiencing past events.[36] Emotional memories are reconstructed by current emotional states.

One study showed how selective memory can maintain belief in extrasensory perception (ESP).[38] Believers and disbelievers were each shown descriptions of ESP experiments. Half of each group were told that the experimental results supported the existence of ESP, while the others were told they did not. In a subsequent test, participants recalled the material accurately, apart from believers who had read the non-supportive evidence. This group remembered significantly less information and some of them incorrectly remembered the results as supporting ESP.[38]

A less abstract study was the Stanford biased interpretation experiment in which participants with strong opinions about the death penalty read about mixed experimental evidence. Twenty-three percent of the participants reported that their views had become more extreme, and this self-reported shift correlated strongly with their initial attitudes.[24] In later experiments, participants also reported their opinions becoming more extreme in response to ambiguous information. However, comparisons of their attitudes before and after the new evidence showed no significant change, suggesting that the self-reported changes might not be real.[27][39][41] Based on these experiments, Deanna Kuhn and Joseph Lao concluded that polarization is a real phenomenon but far from inevitable, only happening in a small minority of cases. They found that it was prompted not only by considering mixed evidence, but by merely thinking about the topic.[39]

Charles Taber and Milton Lodge argued that the Stanford team's result had been hard to replicate because the arguments used in later experiments were too abstract or confusing to evoke an emotional response. The Taber and Lodge study used the emotionally charged topics of gun control and affirmative action.[27] They measured the attitudes of their participants towards these issues before and after reading arguments on each side of the debate. Two groups of participants showed attitude polarization: those with strong prior opinions and those who were politically knowledgeable. In part of this study, participants chose which information sources to read, from a list prepared by the experimenters. For example, they could read the National Rifle Association's and the Brady Anti-Handgun Coalition's arguments on gun control. Even when instructed to be even-handed, participants were more likely to read arguments that supported their existing attitudes than arguments that did not. This biased search for information correlated well with the polarization effect.[27]

The "backfire effect" is a name for the finding that, given evidence against their beliefs, people can reject the evidence and believe even more strongly.[42][43] The phrase was coined by Brendan Nyhan and Jason Reifler.[44]

Confirmation biases can be used to explain why some beliefs persist when the initial evidence for them is removed.[46] This belief perseverance effect has been shown by a series of experiments using what is called the "debriefing paradigm": participants read fake evidence for a hypothesis, their attitude change is measured, then the fakery is exposed in detail. Their attitudes are then measured once more to see if their belief returns to its previous level.[45]

A common finding is that at least some of the initial belief remains even after a full debrief.[47] In one experiment, participants had to distinguish between real and fake suicide notes. The feedback was random: some were told they had done well while others were told they had performed badly. Even after being fully debriefed, participants were still influenced by the feedback. They still thought they were better or worse than average at that kind of task, depending on what they had initially been told.[48]

In another study, participants read job performance ratings of two firefighters, along with their responses to a risk aversion test.[45] This fictional data was arranged to show either a negative or positive association: some participants were told that a risk-taking firefighter did better, while others were told that the risk-taker did less well than a risk-averse colleague.[49] Even if these two case studies were true, they would have been scientifically poor evidence for a conclusion about firefighters in general. However, the participants found them subjectively persuasive.[49] When the case studies were shown to be fictional, participants' belief in a link diminished, but around half of the original effect remained.[45] Follow-up interviews established that the participants had understood the debriefing and taken it seriously. Participants seemed to trust the debriefing, but regarded the discredited information as irrelevant to their personal belief.[49]

One demonstration of irrational primacy used colored chips supposedly drawn from two urns. Participants were told the color distributions of the urns, and had to estimate the probability of a chip being drawn from one of them.[50] In fact, the colors appeared in a pre-arranged order. The first thirty draws favored one urn and the next thirty favored the other.[46] The series as a whole was neutral, so rationally, the two urns were equally likely. However, after sixty draws, participants favored the urn suggested by the initial thirty.[50]
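Why the full series is neutral can be illustrated with Bayes' rule. The sketch below is a minimal illustration, not a reconstruction of the experiment: the urn compositions (60% and 40% red) and the exact draw counts are assumptions chosen so that the second thirty draws mirror the first thirty.

```python
import math

# Assumed probability of drawing a red chip from each urn (hypothetical values).
P_RED = {"A": 0.6, "B": 0.4}


def posterior_a(draws, prior_a=0.5):
    """Posterior probability that the chips come from urn A, by Bayes' rule."""
    log_a = math.log(prior_a)
    log_b = math.log(1 - prior_a)
    for colour in draws:
        p_a = P_RED["A"] if colour == "red" else 1 - P_RED["A"]
        p_b = P_RED["B"] if colour == "red" else 1 - P_RED["B"]
        log_a += math.log(p_a)
        log_b += math.log(p_b)
    # Normalise the two unnormalised log-posteriors.
    return 1 / (1 + math.exp(log_b - log_a))


# Assumed sequence: the first thirty draws favor urn A,
# and the next thirty favor urn B to exactly the same degree.
first_thirty = ["red"] * 18 + ["blue"] * 12
next_thirty = ["blue"] * 18 + ["red"] * 12

after_30 = posterior_a(first_thirty)
after_60 = posterior_a(first_thirty + next_thirty)
```

With these assumed numbers, an ideal updater strongly favors urn A after thirty draws and returns to even odds after sixty, because the order of the draws does not enter the calculation. The primacy effect is the departure from that order-independence.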

Another experiment involved a slide show of a single object, seen as just a blur at first and in slightly better focus with each succeeding slide.[50] After each slide, participants had to state their best guess of what the object was. Participants whose early guesses were wrong persisted with those guesses, even when the picture was sufficiently in focus that the object was readily recognizable to other people.[46]

Another study recorded the symptoms experienced by arthritic patients, along with weather conditions over a 15-month period. Nearly all the patients reported that their pains were correlated with weather conditions, although the real correlation was zero.[53]

This effect is a kind of biased interpretation, in that objectively neutral or unfavorable evidence is interpreted to support existing beliefs. It is also related to biases in hypothesis-testing behavior.[54] In judging whether two events, such as illness and bad weather, are correlated, people rely heavily on the number of positive-positive cases: in this example, instances of both pain and bad weather. They pay relatively little attention to the other kinds of observation (of no pain and/or good weather).[55] This parallels the reliance on positive tests in hypothesis testing.[54] It may also reflect selective recall, in that people may have a sense that two events are correlated because it is easier to recall times when they happened together.[54]
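The point about positive-positive cases can be made concrete with a two-by-two table. The counts below are invented for illustration and are not data from the arthritis study; `phi_coefficient` is a standard measure of association for such a table.

```python
import math


def phi_coefficient(a, b, c, d):
    """Phi coefficient for a 2x2 table.

    a: pain and bad weather      b: pain and good weather
    c: no pain and bad weather   d: no pain and good weather
    """
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator


# Hypothetical day counts (illustrative only).
pain_bad, pain_good = 40, 20
no_pain_bad, no_pain_good = 20, 10

phi = phi_coefficient(pain_bad, pain_good, no_pain_bad, no_pain_good)

# The rate of pain is the same whatever the weather.
p_pain_given_bad = pain_bad / (pain_bad + no_pain_bad)
p_pain_given_good = pain_good / (pain_good + no_pain_good)
```

In this made-up table the positive-positive cell is the largest of the four, yet the association is exactly zero. An observer who attends mainly to that cell would perceive a link that the other three cells rule out.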

A study has found individual differences in myside bias. This study investigated individual differences that are acquired through learning in a cultural context and are mutable. The researcher found important individual differences in argumentation. Studies have suggested that individual differences such as deductive reasoning ability, ability to overcome belief bias, epistemological understanding, and thinking disposition are significant predictors of reasoning and of generating arguments, counterarguments, and rebuttals.[59][60][61]

A study by Christopher Wolfe and Anne Britt also investigated how participants' views of "what makes a good argument?" can be a source of myside bias that influences the way a person creates their own arguments.[58] The study investigated individual differences of argumentation schema and asked participants to write essays. The participants were randomly assigned to write essays either for or against their preferred side of an argument and were given either balanced or unrestricted research instructions. The balanced research instructions directed participants to create a balanced argument that included both pros and cons, while the unrestricted research instructions gave no particular guidance on how to create the argument.[58]

Overall, the results revealed that the balanced research instructions significantly increased the incidence of opposing information in participants' arguments. These data also reveal that personal belief is not a source of myside bias. Furthermore, participants who believed that good arguments were based on facts were more likely to exhibit myside bias than participants who did not agree with this statement. This evidence is consistent with the claims proposed in Baron's article that people's opinions about good thinking can influence how arguments are generated.[58]

While the actual rule was simply "any ascending sequence", the participants had a great deal of difficulty in finding it, often announcing rules that were far more specific, such as "the middle number is the average of the first and last".[68] The participants seemed to test only positive examples: triples that obeyed their hypothesized rule. For example, if they thought the rule was, "Each number is two greater than its predecessor", they would offer a triple that fit this rule, such as (11, 13, 15), rather than a triple that violated it, such as (11, 12, 19).[70]
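Why positive tests could never expose the error in this task can be shown with a short sketch. The two rules and the triples (11, 13, 15) and (11, 12, 19) come from the description above; the function names and the extra positive triples are illustrative assumptions.

```python
def true_rule(triple):
    """The experimenter's actual rule: any ascending sequence."""
    a, b, c = triple
    return a < b < c


def hypothesis(triple):
    """A participant's narrower guess: each number is two greater than its predecessor."""
    a, b, c = triple
    return b - a == 2 and c - b == 2


def refutes(triple):
    """A test refutes the hypothesis only when the experimenter's answer
    differs from what the hypothesis predicts."""
    return hypothesis(triple) != true_rule(triple)


# Positive tests: triples chosen because they fit the hypothesis.
positive_tests = [(11, 13, 15), (1, 3, 5), (20, 22, 24)]

# Negative test: a triple chosen because it violates the hypothesis.
negative_test = (11, 12, 19)

positive_refutations = [t for t in positive_tests if refutes(t)]
negative_refutes = refutes(negative_test)
```

Because every triple that fits the narrower guess is also ascending, each positive test receives a "yes" and `positive_refutations` stays empty. Only the negative test, which the hypothesis predicts should fail but which the experimenter accepts, reveals that the guess is too specific.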

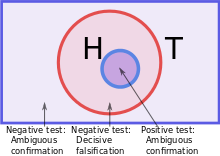

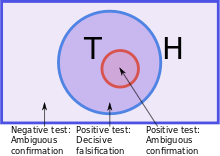

Wason accepted falsificationism, according to which a scientific test of a hypothesis is a serious attempt to falsify it. He interpreted his results as showing a preference for confirmation over falsification, hence the term "confirmation bias".[Note 4][71] Wason also used confirmation bias to explain the results of his selection task experiment.[72] In this task, participants are given partial information about a set of objects, and have to specify what further information they would need to tell whether or not a conditional rule ("If A, then B") applies. It has been found repeatedly that people perform badly on various forms of this test, in most cases ignoring information that could potentially refute the rule.[73][74]
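The logic of the selection task can be sketched by enumerating what the hidden side of each object might be. The representation below (a visible attribute name and its truth value) is an assumption made for illustration and does not reproduce any particular version of the experiment.

```python
def rule_holds(a, b):
    """The conditional rule "If A, then B" is violated only by A without B."""
    return (not a) or b


def must_check(visible_attr, visible_value):
    """An object needs checking only if some hidden value could violate the rule."""
    for hidden in (True, False):
        if visible_attr == "A":
            a, b = visible_value, hidden
        else:
            a, b = hidden, visible_value
        if not rule_holds(a, b):
            return True
    return False


# Four objects, each showing one attribute: A, not-A, B, not-B.
objects = [("A", True), ("A", False), ("B", True), ("B", False)]

to_check = [obj for obj in objects if must_check(*obj)]
```

Under this model, only the object showing A and the object showing not-B can refute the rule. The object showing B is consistent with the rule whatever its hidden side is, so examining it is a confirming check that cannot falsify anything.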

In light of this and other critiques, the focus of research moved away from confirmation versus falsification to examine whether people test hypotheses in an informative way, or an uninformative but positive way. The search for "true" confirmation bias led psychologists to look at a wider range of effects in how people process information.[78]

Cognitive explanations for confirmation bias are based on limitations in people's ability to handle complex tasks, and the shortcuts, called heuristics, that they use.[81] For example, people may judge the reliability of evidence by using the availability heuristic—i.e., how readily a particular idea comes to mind.[82] It is also possible that people can only focus on one thought at a time, so find it difficult to test alternative hypotheses in parallel.[83] Another heuristic is the positive test strategy identified by Klayman and Ha, in which people test a hypothesis by examining cases where they expect a property or event to occur. This heuristic avoids the difficult or impossible task of working out how diagnostic each possible question will be. However, it is not universally reliable, so people can overlook challenges to their existing beliefs.[13][84]

Motivational explanations involve an effect of desire on belief, sometimes called "wishful thinking".[85][86] It is known that people prefer pleasant thoughts over unpleasant ones in a number of ways: this is called the "Pollyanna principle".[87] Applied to arguments or sources of evidence, this could explain why desired conclusions are more likely to be believed true.[85] According to experiments that manipulate the desirability of the conclusion, people demand a high standard of evidence for unpalatable ideas and a low standard for preferred ideas. In other words, they ask, "Can I believe this?" for some suggestions and, "Must I believe this?" for others.[88][89] Although consistency is a desirable feature of attitudes, an excessive drive for consistency is another potential source of bias because it may prevent people from neutrally evaluating new, surprising information.[85] Social psychologist Ziva Kunda combines the cognitive and motivational theories, arguing that motivation creates the bias, but cognitive factors determine the size of the effect.[90]

Explanations in terms of cost-benefit analysis assume that people do not just test hypotheses in a disinterested way, but assess the costs of different errors.[91] Using ideas from evolutionary psychology, James Friedrich suggests that people do not primarily aim at truth in testing hypotheses, but try to avoid the most costly errors. For example, employers might ask one-sided questions in job interviews because they are focused on weeding out unsuitable candidates.[92] Yaacov Trope and Akiva Liberman's refinement of this theory assumes that people compare the two different kinds of error: accepting a false hypothesis or rejecting a true hypothesis. For instance, someone who underestimates a friend's honesty might treat him or her suspiciously and so undermine the friendship. Overestimating the friend's honesty may also be costly, but less so. In this case, it would be rational to seek, evaluate or remember evidence of their honesty in a biased way.[93]

When someone gives an initial impression of being introverted or extroverted, questions that match that impression come across as more empathic.[94] This suggests that when talking to someone who seems to be an introvert, it is a sign of better social skills to ask, "Do you feel awkward in social situations?" rather than, "Do you like noisy parties?" The connection between confirmation bias and social skills was corroborated by a study of how college students get to know other people. Highly self-monitoring students, who are more sensitive to their environment and to social norms, asked more matching questions when interviewing a high-status staff member than when getting to know fellow students.[94]

Psychologists Jennifer Lerner and Philip Tetlock distinguish two different kinds of thinking process. Exploratory thought neutrally considers multiple points of view and tries to anticipate all possible objections to a particular position, while confirmatory thought seeks to justify a specific point of view. Lerner and Tetlock say that when people expect to justify their position to others whose views they already know, they will tend to adopt a similar position to those people, and then use confirmatory thought to bolster their own credibility. However, if the external parties are overly aggressive or critical, people will disengage from thought altogether, and simply assert their personal opinions without justification.[95] Lerner and Tetlock say that people only push themselves to think critically and logically when they know in advance they will need to explain themselves to others who are well-informed, genuinely interested in the truth, and whose views they don't already know.[96] Because those conditions rarely exist, they argue, most people are using confirmatory thought most of the time.[97]

Cognitive therapy was developed by Aaron T. Beck in the early 1960s and has become a popular approach.[105] According to Beck, biased information processing is a factor in depression.[106] His approach teaches people to treat evidence impartially, rather than selectively reinforcing negative outlooks.[64] Phobias and hypochondria have also been shown to involve confirmation bias for threatening information.[107]

Nickerson argues that reasoning in judicial and political contexts is sometimes subconsciously biased, favoring conclusions that judges, juries or governments have already committed to.[108] Since the evidence in a jury trial can be complex, and jurors often reach decisions about the verdict early on, it is reasonable to expect an attitude polarization effect. The prediction that jurors will become more extreme in their views as they see more evidence has been borne out in experiments with mock trials.[109][110] Both inquisitorial and adversarial criminal justice systems are affected by confirmation bias.[111]

Confirmation bias can be a factor in creating or extending conflicts, from emotionally charged debates to wars: by interpreting the evidence in their favor, each opposing party can become overconfident that it is in the stronger position.[112] On the other hand, confirmation bias can result in people ignoring or misinterpreting the signs of an imminent or incipient conflict. For example, psychologists Stuart Sutherland and Thomas Kida have each argued that US Admiral Husband E. Kimmel showed confirmation bias when playing down the first signs of the Japanese attack on Pearl Harbor.[73][113]

A two-decade study of political pundits by Philip E. Tetlock found that, on the whole, their predictions were not much better than chance. Tetlock divided experts into "foxes" who maintained multiple hypotheses, and "hedgehogs" who were more dogmatic. In general, the hedgehogs were much less accurate. Tetlock blamed their failure on confirmation bias—specifically, their inability to make use of new information that contradicted their existing theories.[114]

In the 2013 murder trial of David Camm, the defense argued that Camm was charged with the murders of his wife and two children solely because of confirmation bias within the investigation.[115] Camm was arrested three days after the murders on the basis of faulty evidence. Despite the discovery that almost every piece of evidence on the probable cause affidavit was inaccurate or unreliable, the charges against him were not dropped.[116][117] A sweatshirt found at the crime scene was subsequently discovered to contain the DNA of a convicted felon, his prison nickname, and his department of corrections number.[118] Investigators looked for Camm's DNA on the sweatshirt, but failed to investigate any other pieces of evidence found on it, and the foreign DNA was not run through CODIS until five years after the crime.[119][120] When the second suspect was discovered, prosecutors charged them as co-conspirators in the crime despite finding no evidence linking the two men.[121][122] Camm was acquitted of the murders.[123]

As a striking illustration of confirmation bias in the real world, Nickerson mentions numerological pyramidology: the practice of finding meaning in the proportions of the Egyptian pyramids.[126] There are many different length measurements that can be made of, for example, the Great Pyramid of Giza and many ways to combine or manipulate them. Hence it is almost inevitable that people who look at these numbers selectively will find superficially impressive correspondences, for example with the dimensions of the Earth.[126]

In the context of scientific research, confirmation biases can sustain theories or research programs in the face of inadequate or even contradictory evidence;[73][130] the field of parapsychology has been particularly affected.[131]

An experimenter's confirmation bias can potentially affect which data are reported. Data that conflict with the experimenter's expectations may be more readily discarded as unreliable, producing the so-called file drawer effect. To combat this tendency, scientific training teaches ways to prevent bias.[132] For example, experimental design of randomized controlled trials (coupled with their systematic review) aims to minimize sources of bias.[132][133] The social process of peer review is thought to mitigate the effect of individual scientists' biases,[134] even though the peer review process itself may be susceptible to such biases.[129][135] Confirmation bias may thus be especially harmful to objective evaluations regarding nonconforming results since biased individuals may regard opposing evidence to be weak in principle and give little serious thought to revising their beliefs.[128] Scientific innovators often meet with resistance from the scientific community, and research presenting controversial results frequently receives harsh peer review.[136]

Types

Confirmation biases are effects in information processing. They differ from what is sometimes called the behavioral confirmation effect, commonly known as self-fulfilling prophecy, in which a person's expectations influence their own behavior, bringing about the expected result.[4]

Some psychologists restrict the term confirmation bias to selective collection of evidence that supports what one already believes while ignoring or rejecting evidence that supports a different conclusion. Other psychologists apply the term more broadly to the tendency to preserve one's existing beliefs when searching for evidence, interpreting it, or recalling it from memory.[5][Note 3]

Biased search for information

Confirmation bias has been described as an internal "yes man", echoing back a person's beliefs like Charles Dickens' character Uriah Heep.[6]

The preference for positive tests in itself is not a bias, since positive tests can be highly informative.[13] However, in combination with other effects, this strategy can confirm existing beliefs or assumptions, independently of whether they are true.[14] In real-world situations, evidence is often complex and mixed. For example, various contradictory ideas about someone could each be supported by concentrating on one aspect of his or her behavior.[8] Thus any search for evidence in favor of a hypothesis is likely to succeed.[14] One illustration of this is the way the phrasing of a question can significantly change the answer.[8] For example, people who are asked, "Are you happy with your social life?" report greater satisfaction than those asked, "Are you unhappy with your social life?"[15]

Even a small change in a question's wording can affect how people search through available information, and hence the conclusions they reach. This was shown using a fictional child custody case.[16] Participants read that Parent A was moderately suitable to be the guardian in multiple ways. Parent B had a mix of salient positive and negative qualities: a close relationship with the child but a job that would take him or her away for long periods of time. When asked, "Which parent should have custody of the child?" the majority of participants chose Parent B, looking mainly for positive attributes. However, when asked, "Which parent should be denied custody of the child?" they looked for negative attributes and the majority answered that Parent B should be denied custody, implying that Parent A should have custody.[16]

Similar studies have demonstrated how people engage in a biased search for information, but also that this phenomenon may be limited by a preference for genuine diagnostic tests. In an initial experiment, participants rated another person on the introversion–extroversion personality dimension on the basis of an interview. They chose the interview questions from a given list. When the interviewee was introduced as an introvert, the participants chose questions that presumed introversion, such as, "What do you find unpleasant about noisy parties?" When the interviewee was described as extroverted, almost all the questions presumed extroversion, such as, "What would you do to liven up a dull party?" These loaded questions gave the interviewees little or no opportunity to falsify the hypothesis about them.[17] A later version of the experiment gave the participants less presumptive questions to choose from, such as, "Do you shy away from social interactions?"[18] Participants preferred to ask these more diagnostic questions, showing only a weak bias towards positive tests. This pattern, of a main preference for diagnostic tests and a weaker preference for positive tests, has been replicated in other studies.[18]

Personality traits influence and interact with biased search processes.[19] Individuals vary in their ability to defend their attitudes from external attacks in relation to selective exposure. Selective exposure occurs when individuals search for information that is consistent, rather than inconsistent, with their personal beliefs.[20] An experiment examined the extent to which individuals could refute arguments that contradicted their personal beliefs.[19] People with high confidence levels more readily seek out information that contradicts their personal position in order to form an argument. Individuals with low confidence levels do not seek out contradictory information and prefer information that supports their personal position. People generate and evaluate evidence in arguments in ways that are biased towards their own beliefs and opinions.[21] Heightened confidence levels decrease preference for information that supports individuals' personal beliefs.

Another experiment gave participants a complex rule-discovery task that involved moving objects simulated by a computer.[22] Objects on the computer screen followed specific laws, which the participants had to figure out. So, participants could "fire" objects across the screen to test their hypotheses. Despite making many attempts over a ten-hour session, none of the participants figured out the rules of the system. They typically attempted to confirm rather than falsify their hypotheses, and were reluctant to consider alternatives. Even after seeing objective evidence that refuted their working hypotheses, they frequently continued doing the same tests. Some of the participants were taught proper hypothesis-testing, but these instructions had almost no effect.[22]

Biased interpretation

Smart people believe weird things because they are skilled at defending beliefs they arrived at for non-smart reasons.

A team at Stanford University conducted an experiment involving participants who felt strongly about capital punishment, with half in favor and half against it.[24][25] Each participant read descriptions of two studies: a comparison of U.S. states with and without the death penalty, and a comparison of murder rates in a state before and after the introduction of the death penalty. After reading a quick description of each study, the participants were asked whether their opinions had changed. Then, they read a more detailed account of each study's procedure and had to rate whether the research was well-conducted and convincing.[24] In fact, the studies were fictional. Half the participants were told that one kind of study supported the deterrent effect and the other undermined it, while for other participants the conclusions were swapped.[24][25]

The participants, whether supporters or opponents, reported shifting their attitudes slightly in the direction of the first study they read. Once they read the more detailed descriptions of the two studies, they almost all returned to their original belief regardless of the evidence provided, pointing to details that supported their viewpoint and disregarding anything contrary. Participants described studies supporting their pre-existing view as superior to those that contradicted it, in detailed and specific ways.[24][26] Writing about a study that seemed to undermine the deterrence effect, a death penalty proponent wrote, "The research didn't cover a long enough period of time", while an opponent's comment on the same study said, "No strong evidence to contradict the researchers has been presented".[24] The results illustrated that people set higher standards of evidence for hypotheses that go against their current expectations. This effect, known as "disconfirmation bias", has been supported by other experiments.[27]

In another experiment, participants judged statements by political figures, including their favored candidate, while in a magnetic resonance imaging (MRI) scanner that monitored their brain activity. As participants evaluated contradictory statements by their favored candidate, emotional centers of their brains were aroused. This did not happen with the statements by the other figures. The experimenters inferred that the different responses to the statements were not due to passive reasoning errors. Instead, the participants were actively reducing the cognitive dissonance induced by reading about their favored candidate's irrational or hypocritical behavior.[28][page needed]

Biases in belief interpretation are persistent, regardless of intelligence level. Participants in an experiment took the SAT test (a college admissions test used in the United States) to assess their intelligence levels. They then read information regarding safety concerns for vehicles, and the experimenters manipulated the national origin of the car. American participants gave their opinion on whether the car should be banned, using a six-point scale where one indicated "definitely yes" and six indicated "definitely no." Participants first evaluated whether they would allow a dangerous German car on American streets and a dangerous American car on German streets. Participants believed that the dangerous German car on American streets should be banned more quickly than the dangerous American car on German streets. The rate at which participants would ban a car did not differ across intelligence levels.[21]

Biased interpretation is not restricted to emotionally significant topics. In another experiment, participants were told a story about a theft. They had to rate the evidential importance of statements arguing either for or against a particular character being responsible. When they hypothesized that character's guilt, they rated statements supporting that hypothesis as more important than conflicting statements.[29]

Biased memory

Even if people gather and interpret evidence in a neutral manner, they may still remember it selectively to reinforce their expectations. This effect is called "selective recall", "confirmatory memory" or "access-biased memory".[30] Psychological theories differ in their predictions about selective recall. Schema theory predicts that information matching prior expectations will be more easily stored and recalled than information that does not match.[31] Some alternative approaches say that surprising information stands out and so is memorable.[31] Predictions from both these theories have been confirmed in different experimental contexts, with no theory winning outright.[32]

In one study, participants read a profile of a woman which described a mix of introverted and extroverted behaviors.[33] They later had to recall examples of her introversion and extroversion. One group was told this was to assess the woman for a job as a librarian, while a second group were told it was for a job in real estate sales. There was a significant difference between what these two groups recalled, with the "librarian" group recalling more examples of introversion and the "sales" groups recalling more extroverted behavior.[33] A selective memory effect has also been shown in experiments that manipulate the desirability of personality types.[31][34] In one of these, a group of participants were shown evidence that extroverted people are more successful than introverts. Another group were told the opposite. In a subsequent, apparently unrelated, study, they were asked to recall events from their lives in which they had been either introverted or extroverted. Each group of participants provided more memories connecting themselves with the more desirable personality type, and recalled those memories more quickly.[35]

Changes in emotional states can also influence memory recall.[36][37] Participants rated how they felt when they had first learned that O.J. Simpson had been acquitted of murder charges.[36] They described their emotional reactions and confidence regarding the verdict one week, two months, and one year after the trial. Results indicated that participants' assessments of Simpson's guilt changed over time. The more that participants' opinion of the verdict had changed, the less stable were the participants' memories regarding their initial emotional reactions. When participants recalled their initial emotional reactions two months and a year later, past appraisals closely resembled current appraisals of emotion. People demonstrate sizable myside bias when discussing their opinions on controversial topics.[21] Memory recall and construction of experiences undergo revision in relation to corresponding emotional states.

Myside bias has been shown to influence the accuracy of memory recall.[37] In an experiment, widows and widowers rated the intensity of their experienced grief six months and five years after the deaths of their spouses. Participants reported more intense grief at six months than at five years. Yet, when the participants were asked after five years how they had felt six months after the death of their significant other, the intensity of grief participants recalled was highly correlated with their current level of grief. Individuals appear to utilize their current emotional states to analyze how they must have felt when experiencing past events.[36] Emotional memories are reconstructed by current emotional states.

One study showed how selective memory can maintain belief in extrasensory perception (ESP).[38] Believers and disbelievers were each shown descriptions of ESP experiments. Half of each group were told that the experimental results supported the existence of ESP, while the others were told they did not. In a subsequent test, participants recalled the material accurately, apart from believers who had read the non-supportive evidence. This group remembered significantly less information and some of them incorrectly remembered the results as supporting ESP.[38]

Related effects

Polarization of opinion

Main article: Attitude polarization

When people with opposing views interpret new information in a biased way, their views can move even further apart. This is called "attitude polarization".[39] The effect was demonstrated by an experiment that involved drawing a series of red and black balls from one of two concealed "bingo baskets". Participants knew that one basket contained 60% black and 40% red balls; the other, 40% black and 60% red. The experimenters looked at what happened when balls of alternating color were drawn in turn, a sequence that does not favor either basket. After each ball was drawn, participants in one group were asked to state out loud their judgments of the probability that the balls were being drawn from one or the other basket. These participants tended to grow more confident with each successive draw: whether they initially thought the basket with 60% black balls or the one with 60% red balls was the more likely source, their estimate of the probability increased. Another group of participants were asked to state probability estimates only at the end of a sequence of drawn balls, rather than after each ball. They did not show the polarization effect, suggesting that it does not necessarily occur when people simply hold opposing positions, but rather when they openly commit to them.[40]

A less abstract study was the Stanford biased interpretation experiment in which participants with strong opinions about the death penalty read about mixed experimental evidence. Twenty-three percent of the participants reported that their views had become more extreme, and this self-reported shift correlated strongly with their initial attitudes.[24] In later experiments, participants also reported their opinions becoming more extreme in response to ambiguous information. However, comparisons of their attitudes before and after the new evidence showed no significant change, suggesting that the self-reported changes might not be real.[27][39][41] Based on these experiments, Deanna Kuhn and Joseph Lao concluded that polarization is a real phenomenon but far from inevitable, only happening in a small minority of cases. They found that it was prompted not only by considering mixed evidence, but by merely thinking about the topic.[39]
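The bingo-basket experiment described above can be compared against a normative baseline. The following is a minimal sketch, not the experimenters' own analysis: it applies Bayes' rule after each draw, assuming independent draws and equal prior probability for the two baskets (neither assumption is spelled out in the description of the study). The function name and its parameters are illustrative.

```python
from fractions import Fraction

def posterior_black_basket(draws, p_black_in_a=Fraction(3, 5), prior_a=Fraction(1, 2)):
    """Return P(basket A | draws so far) after each draw.

    Basket A is the 60%-black basket; basket B mirrors it (40% black).
    Assumes draws are independent given the basket (an assumption made
    for this sketch, not a detail taken from the study).
    """
    p_black_in_b = 1 - p_black_in_a
    post_a = prior_a
    history = []
    for color in draws:
        like_a = p_black_in_a if color == "black" else 1 - p_black_in_a
        like_b = p_black_in_b if color == "black" else 1 - p_black_in_b
        numerator = like_a * post_a
        post_a = numerator / (numerator + like_b * (1 - post_a))
        history.append(post_a)
    return history

# Alternating colors: the evidence never accumulates in favor of either basket.
alternating = ["black", "red"] * 5
trace = posterior_black_basket(alternating)
print([str(p) for p in trace])
```

Under these assumptions the posterior only moves between 3/5 and 1/2 and returns to 1/2 after every pair of draws, so a steadily growing confidence in either basket is not supported by the sequence itself.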

Charles Taber and Milton Lodge argued that the Stanford team's result had been hard to replicate because the arguments used in later experiments were too abstract or confusing to evoke an emotional response. The Taber and Lodge study used the emotionally charged topics of gun control and affirmative action.[27] They measured the attitudes of their participants towards these issues before and after reading arguments on each side of the debate. Two groups of participants showed attitude polarization: those with strong prior opinions and those who were politically knowledgeable. In part of this study, participants chose which information sources to read, from a list prepared by the experimenters. For example, they could read the National Rifle Association's and the Brady Anti-Handgun Coalition's arguments on gun control. Even when instructed to be even-handed, participants were more likely to read arguments that supported their existing attitudes than arguments that did not. This biased search for information correlated well with the polarization effect.[27]

The "backfire effect" is a name for the finding that, given evidence against their beliefs, people can reject the evidence and believe even more strongly.[42][43] The phrase was coined by Brendan Nyhan and Jason Reifler.[44]

Persistence of discredited beliefs

[B]eliefs can survive potent logical or empirical challenges. They can survive and even be bolstered by evidence that most uncommitted observers would agree logically demands some weakening of such beliefs. They can even survive the total destruction of their original evidential bases.

—Lee Ross and Craig Anderson[45]

A common finding is that at least some of the initial belief remains even after a full debrief.[47] In one experiment, participants had to distinguish between real and fake suicide notes. The feedback was random: some were told they had done well while others were told they had performed badly. Even after being fully debriefed, participants were still influenced by the feedback. They still thought they were better or worse than average at that kind of task, depending on what they had initially been told.[48]

In another study, participants read job performance ratings of two firefighters, along with their responses to a risk aversion test.[45] This fictional data was arranged to show either a negative or positive association: some participants were told that a risk-taking firefighter did better, while others were told they did less well than a risk-averse colleague.[49] Even if these two case studies were true, they would have been scientifically poor evidence for a conclusion about firefighters in general. However, the participants found them subjectively persuasive.[49] When the case studies were shown to be fictional, participants' belief in a link diminished, but around half of the original effect remained.[45] Follow-up interviews established that the participants had understood the debriefing and taken it seriously. Participants seemed to trust the debriefing, but regarded the discredited information as irrelevant to their personal belief.[49]

Preference for early information

Experiments have shown that information is weighted more strongly when it appears early in a series, even when the order is unimportant. For example, people form a more positive impression of someone described as "intelligent, industrious, impulsive, critical, stubborn, envious" than when they are given the same words in reverse order.[50] This irrational primacy effect is independent of the primacy effect in memory in which the earlier items in a series leave a stronger memory trace.[50] Biased interpretation offers an explanation for this effect: seeing the initial evidence, people form a working hypothesis that affects how they interpret the rest of the information.[46]

One demonstration of irrational primacy used colored chips supposedly drawn from two urns. Participants were told the color distributions of the urns, and had to estimate the probability of a chip being drawn from one of them.[50] In fact, the colors appeared in a pre-arranged order. The first thirty draws favored one urn and the next thirty favored the other.[46] The series as a whole was neutral, so rationally, the two urns were equally likely. However, after sixty draws, participants favored the urn suggested by the initial thirty.[50]
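The statement that the order of the chips is rationally unimportant can be illustrated numerically. The sketch below is only an illustration under stated assumptions: the urn compositions (70% and 30% red) and the exact chip counts are hypothetical, since the text does not give the values used in the experiment, and draws are assumed independent. Because the likelihood is a product of per-chip terms, reordering the chips cannot change the result.

```python
from fractions import Fraction

def posterior_urn_a(chips, p_red_in_a, p_red_in_b, prior_a=Fraction(1, 2)):
    """P(urn A | chips) for independent draws from one of two urns."""
    weight_a, weight_b = prior_a, 1 - prior_a
    for chip in chips:
        weight_a *= p_red_in_a if chip == "red" else 1 - p_red_in_a
        weight_b *= p_red_in_b if chip == "red" else 1 - p_red_in_b
    return weight_a / (weight_a + weight_b)

# Hypothetical urns: A is 70% red, B is 30% red (values assumed for illustration).
P_A, P_B = Fraction(7, 10), Fraction(3, 10)

first_thirty = ["red"] * 21 + ["blue"] * 9   # this block favors urn A
next_thirty = ["red"] * 9 + ["blue"] * 21    # this block favors urn B

after_first_block = posterior_urn_a(first_thirty, P_A, P_B)
full_series = posterior_urn_a(first_thirty + next_thirty, P_A, P_B)
reversed_series = posterior_urn_a(next_thirty + first_thirty, P_A, P_B)

print(float(after_first_block))           # close to 1: strong early evidence for A
print(full_series, reversed_series)       # both exactly 1/2
```

In this sketch the early block alone makes urn A look almost certain, yet the complete series leaves the two urns exactly equally likely in either order, which is the rational benchmark the participants departed from.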

Another experiment involved a slide show of a single object, seen as just a blur at first and in slightly better focus with each succeeding slide.[50] After each slide, participants had to state their best guess of what the object was. Participants whose early guesses were wrong persisted with those guesses, even when the picture was sufficiently in focus that the object was readily recognizable to other people.[46]

Illusory association between events

Main article: Illusory correlation

Illusory correlation is the tendency to see non-existent correlations in a set of data.[51] This tendency was first demonstrated in a series of experiments in the late 1960s.[52] In one experiment, participants read a set of psychiatric case studies, including responses to the Rorschach inkblot test. The participants reported that the homosexual men in the set were more likely to report seeing buttocks, anuses or sexually ambiguous figures in the inkblots. In fact the case studies were fictional and, in one version of the experiment, had been constructed so that the homosexual men were less likely to report this imagery.[51] In a survey, a group of experienced psychoanalysts reported the same set of illusory associations with homosexuality.[51][52]

Another study recorded the symptoms experienced by arthritic patients, along with weather conditions over a 15-month period. Nearly all the patients reported that their pains were correlated with weather conditions, although the real correlation was zero.[53]

This effect is a kind of biased interpretation, in that objectively neutral or unfavorable evidence is interpreted to support existing beliefs. It is also related to biases in hypothesis-testing behavior.[54] In judging whether two events, such as illness and bad weather, are correlated, people rely heavily on the number of positive-positive cases: in this example, instances of both pain and bad weather. They pay relatively little attention to the other kinds of observation (of no pain and/or good weather).[55] This parallels the reliance on positive tests in hypothesis testing.[54] It may also reflect selective recall, in that people may have a sense that two events are correlated because it is easier to recall times when they happened together.[54]
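To see why attending mainly to positive-positive cases is misleading, consider a 2x2 table of pain versus weather. The counts below are invented for illustration and are not data from the arthritis study; the sketch uses the standard phi coefficient for a 2x2 table as the measure of association, which is a choice made here rather than the measure reported in the cited work.

```python
import math

def phi_coefficient(a, b, c, d):
    """Phi coefficient for a 2x2 contingency table.

    a: pain and bad weather      b: pain and good weather
    c: no pain and bad weather   d: no pain and good weather
    """
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator

# Hypothetical diary: many "pain on a bad-weather day" entries (a = 40) ...
a, b, c, d = 40, 20, 20, 10
pain_rate_bad_weather = a / (a + c)    # share of bad-weather days with pain
pain_rate_good_weather = b / (b + d)   # share of good-weather days with pain

print(pain_rate_bad_weather, pain_rate_good_weather)
print(phi_coefficient(a, b, c, d))
```

Here the positive-positive cell is the largest in the table, yet pain is equally common on good-weather days and the association is zero. Only a comparison across all four cells reveals this.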

Individual differences

Myside bias was once believed to be associated with greater intelligence; however, studies have shown that myside bias can be influenced more by the ability to think rationally than by level of intelligence.[56] Myside bias can cause an inability to effectively and logically evaluate the opposite side of an argument. Studies have stated that myside bias is an absence of "active open-mindedness," meaning the active search for why an initial idea may be wrong.[57] Typically, myside bias is operationalized in empirical studies as the quantity of evidence used in support of one's own side in comparison to the opposite side.[58]

A study has found individual differences in myside bias. This study investigated individual differences that are acquired through learning in a cultural context and are mutable. The researcher found important individual differences in argumentation. Studies have suggested that individual differences such as deductive reasoning ability, ability to overcome belief bias, epistemological understanding, and thinking disposition are significant predictors of reasoning and of generating arguments, counterarguments, and rebuttals.[59][60][61]

A study by Christopher Wolfe and Anne Britt also investigated how participants' views of "what makes a good argument?" can be a source of myside bias that influences the way a person creates their own arguments.[58] The study investigated individual differences of argumentation schema and asked participants to write essays. The participants were randomly assigned to write essays either for or against their preferred side of an argument and were given either balanced or unrestricted research instructions. The balanced research instructions directed participants to create a balanced argument that included both pros and cons, while the unrestricted research instructions gave no particular guidance on how to create the argument.[58]

Overall, the results revealed that the balanced research instructions significantly increased the amount of opposing information that participants included in their arguments. These data also reveal that personal belief is not a source of myside bias. Furthermore, participants who believed that good arguments were based on facts were more likely to exhibit myside bias than participants who did not agree with this statement. This evidence is consistent with the claims proposed in Baron's article that people's opinions about good thinking can influence how arguments are generated.[58]

History

Informal observation

Before psychological research on confirmation bias, the phenomenon had been observed anecdotally throughout history, beginning with the Greek historian Thucydides (c. 460 BC – c. 395 BC), who wrote of misguided treason in The Peloponnesian War: "... for it is a habit of mankind to entrust to careless hope what they long for, and to use sovereign reason to thrust aside what they do not fancy."[62] Italian poet Dante Alighieri (1265–1321) noted it in his famous work, the Divine Comedy, in which St. Thomas Aquinas cautions Dante upon meeting in Paradise, "opinion—hasty—often can incline to the wrong side, and then affection for one's own opinion binds, confines the mind."[63] English philosopher and scientist Francis Bacon (1561–1626),[64] in the Novum Organum, noted that biased assessment of evidence drove "all superstitions, whether in astrology, dreams, omens, divine judgments or the like".[65] He wrote:

The human understanding when it has once adopted an opinion ... draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects or despises, or else by some distinction sets aside or rejects[.][65]

In his essay "What Is Art?", Russian novelist Leo Tolstoy wrote,

I know that most men—not only those considered clever, but even those who are very clever, and capable of understanding most difficult scientific, mathematical, or philosophic problems—can very seldom discern even the simplest and most obvious truth if it be such as to oblige them to admit the falsity of conclusions they have formed, perhaps with much difficulty—conclusions of which they are proud, which they have taught to others, and on which they have built their lives.[66]

Wason's research on hypothesis-testing

The term "confirmation bias" was coined by English psychologist Peter Wason.[67] For an experiment published in 1960, he challenged participants to identify a rule applying to triples of numbers. At the outset, they were told that (2,4,6) fits the rule. Participants could generate their own triples and the experimenter told them whether or not each triple conformed to the rule.[68][69]

While the actual rule was simply "any ascending sequence", the participants had a great deal of difficulty in finding it, often announcing rules that were far more specific, such as "the middle number is the average of the first and last".[68] The participants seemed to test only positive examples, that is, triples that obeyed their hypothesized rule. For example, if they thought the rule was, "Each number is two greater than its predecessor", they would offer a triple that fit this rule, such as (11,13,15) rather than a triple that violates it, such as (11,12,19).[70]
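The logic of the 2-4-6 task can be made concrete with a short simulation. This is a minimal sketch rather than a reconstruction of Wason's procedure: the two rule functions encode the rules quoted above, while the helper `run_test` and the particular lists of test triples are illustrative choices.

```python
def true_rule(triple):
    """The experimenter's actual rule: any ascending sequence."""
    a, b, c = triple
    return a < b < c

def hypothesis(triple):
    """A participant's narrower guess: each number is two greater than its predecessor."""
    a, b, c = triple
    return b - a == 2 and c - b == 2

def run_test(triple):
    """Return (predicted, feedback, falsified) for one proposed triple.

    predicted: what the hypothesis says about the triple
    feedback:  what the experimenter would answer
    falsified: True when the two disagree, exposing the hypothesis as wrong
    """
    predicted = hypothesis(triple)
    feedback = true_rule(triple)
    return predicted, feedback, predicted != feedback

positive_tests = [(11, 13, 15), (1, 3, 5), (20, 22, 24)]  # triples that fit the hypothesis
negative_tests = [(11, 12, 19), (3, 2, 1)]                # triples that violate it

for triple in positive_tests + negative_tests:
    print(triple, run_test(triple))
```

Every positive test receives a "yes" that agrees with the hypothesis, so none of them can reveal that it is too narrow. The violating triple (11,12,19) also receives a "yes", which contradicts the hypothesis and is the only test here that exposes the error; (3,2,1) is rejected by both rules and so is uninformative in this case.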

Wason accepted falsificationism, according to which a scientific test of a hypothesis is a serious attempt to falsify it. He interpreted his results as showing a preference for confirmation over falsification, hence the term "confirmation bias".[Note 4][71] Wason also used confirmation bias to explain the results of his selection task experiment.[72] In this task, participants are given partial information about a set of objects, and have to specify what further information they would need to tell whether or not a conditional rule ("If A, then B") applies. It has been found repeatedly that people perform badly on various forms of this test, in most cases ignoring information that could potentially refute the rule.[73][74]
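The structure of the selection task can be sketched in a few lines of Python. The sketch assumes the common four-card form of the task, in which each object shows either its antecedent side or its consequent side; the card labels and the function name are illustrative, not taken from Wason's materials.

```python
def can_refute(visible_side: str, visible_value: bool) -> bool:
    """Return True if checking the hidden side could reveal a counterexample
    to 'If A, then B', i.e. a case where A is true and B is false."""
    for hidden_value in (True, False):
        if visible_side == "antecedent":
            a, b = visible_value, hidden_value
        else:
            a, b = hidden_value, visible_value
        if a and not b:
            return True
    return False


# Each card shows one side only: either whether A holds or whether B holds.
cards = {
    "A": ("antecedent", True),
    "not A": ("antecedent", False),
    "B": ("consequent", True),
    "not B": ("consequent", False),
}

must_check = [name for name, (side, value) in cards.items() if can_refute(side, value)]
print(must_check)  # ['A', 'not B']
```

Under these assumptions only the "A" and "not B" cards can refute the rule. Checking "B" can at most be consistent with the rule, which is the kind of information that could potentially refute the rule being overlooked in favor of information that cannot.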

Klayman and Ha's critique

A 1987 paper by Joshua Klayman and Young-Won Ha argued that the Wason experiments had not actually demonstrated a bias towards confirmation. Instead, Klayman and Ha interpreted the results in terms of a tendency to make tests that are consistent with the working hypothesis.[75] They called this the "positive test strategy".[8] This strategy is an example of a heuristic: a reasoning shortcut that is imperfect but easy to compute.[1] Klayman and Ha used Bayesian probability and information theory as their standard of hypothesis-testing, rather than the falsificationism used by Wason. According to these ideas, each answer to a question yields a different amount of information, which depends on the person's prior beliefs. Thus a scientific test of a hypothesis is one that is expected to produce the most information. Since the information content depends on initial probabilities, a positive test can either be highly informative or uninformative. Klayman and Ha argued that when people think about realistic problems, they are looking for a specific answer with a small initial probability. In this case, positive tests are usually more informative than negative tests.[13] However, in Wason's rule discovery task the answer (three numbers in ascending order) is very broad, so positive tests are unlikely to yield informative answers. Klayman and Ha supported their analysis by citing an experiment that used the labels "DAX" and "MED" in place of "fits the rule" and "doesn't fit the rule". This avoided implying that the aim was to find a low-probability rule. Participants had much more success with this version of the experiment.[76][77]

Explanations

Confirmation bias is often described as a result of automatic, unintentional strategies rather than deliberate deception.[14][79] According to Robert MacCoun, most biased evidence processing occurs through a combination of both "cold" (cognitive) and "hot" (motivated) mechanisms.[80]

Cognitive explanations for confirmation bias are based on limitations in people's ability to handle complex tasks, and the shortcuts, called heuristics, that they use.[81] For example, people may judge the reliability of evidence by using the availability heuristic, i.e., how readily a particular idea comes to mind.[82] It is also possible that people can only focus on one thought at a time, so find it difficult to test alternative hypotheses in parallel.[83] Another heuristic is the positive test strategy identified by Klayman and Ha, in which people test a hypothesis by examining cases where they expect a property or event to occur. This heuristic avoids the difficult or impossible task of working out how diagnostic each possible question will be. However, it is not universally reliable, so people can overlook challenges to their existing beliefs.[13][84]
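Why the positive test strategy works in some settings and fails in others can be illustrated with a toy model. The sketch below is not Klayman and Ha's Bayesian analysis: it uses the chance of a disconfirming answer as a crude stand-in for how diagnostic a test is, and the universe, the sets and the function name are all hypothetical.

```python
from fractions import Fraction


def disconfirmation_rates(universe: set, target: set, hypothesis: set):
    """Chance that a randomly chosen positive test (a case inside the hypothesis)
    or negative test (a case outside it) contradicts the hypothesis."""
    outside = universe - hypothesis
    # A positive test disconfirms when the case is not actually in the target.
    positive = Fraction(len(hypothesis - target), len(hypothesis))
    # A negative test disconfirms when the case is in the target after all.
    negative = Fraction(len(target - hypothesis), len(outside))
    return positive, negative


universe = set(range(1, 101))

# Rare target: the true rule and the guess each cover 10% of cases and partly overlap.
rare = disconfirmation_rates(universe, set(range(1, 11)), set(range(6, 16)))

# Broad target: the guess is a small subset of a rule covering 90% of cases.
broad = disconfirmation_rates(universe, set(range(1, 91)), set(range(1, 11)))

print("rare target:  positive", float(rare[0]), "negative", float(rare[1]))
print("broad target: positive", float(broad[0]), "negative", float(broad[1]))
```

With the rare target, half of the positive tests expose the error but only 1 in 18 negative tests do. With the broad target, no positive test can expose it, while 8 in 9 negative tests do. The heuristic therefore suits searches for specific, low-probability answers and misleads when the true rule is much broader than the guess.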

Motivational explanations involve an effect of desire on belief, sometimes called "wishful thinking".[85][86] It is known that people prefer pleasant thoughts over unpleasant ones in a number of ways: this is called the "Pollyanna principle".[87] Applied to arguments or sources of evidence, this could explain why desired conclusions are more likely to be believed true.[85] According to experiments that manipulate the desirability of the conclusion, people demand a high standard of evidence for unpalatable ideas and a low standard for preferred ideas. In other words, they ask, "Can I believe this?" for some suggestions and, "Must I believe this?" for others.[88][89] Although consistency is a desirable feature of attitudes, an excessive drive for consistency is another potential source of bias because it may prevent people from neutrally evaluating new, surprising information.[85] Social psychologist Ziva Kunda combines the cognitive and motivational theories, arguing that motivation creates the bias, but cognitive factors determine the size of the effect.[90]

Explanations in terms of cost-benefit analysis assume that people do not just test hypotheses in a disinterested way, but assess the costs of different errors.[91] Using ideas from evolutionary psychology, James Friedrich suggests that people do not primarily aim at truth in testing hypotheses, but try to avoid the most costly errors. For example, employers might ask one-sided questions in job interviews because they are focused on weeding out unsuitable candidates.[92] Yaacov Trope and Akiva Liberman's refinement of this theory assumes that people compare the two different kinds of error: accepting a false hypothesis or rejecting a true hypothesis. For instance, someone who underestimates a friend's honesty might treat him or her suspiciously and so undermine the friendship. Overestimating the friend's honesty may also be costly, but less so. In this case, it would be rational to seek, evaluate or remember evidence of their honesty in a biased way.[93] When someone gives an initial impression of being introverted or extroverted, questions that match that impression come across as more empathic.[94] This suggests that when talking to someone who seems to be an introvert, it is a sign of better social skills to ask, "Do you feel awkward in social situations?" rather than, "Do you like noisy parties?" The connection between confirmation bias and social skills was corroborated by a study of how college students get to know other people. Highly self-monitoring students, who are more sensitive to their environment and to social norms, asked more matching questions when interviewing a high-status staff member than when getting to know fellow students.[94]
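The comparison of error costs described above can be made concrete with a standard decision-theoretic sketch. This is an illustration, not Trope and Liberman's model: the cost values are hypothetical, and the function simply finds the probability at which accepting a hypothesis becomes cheaper in expectation than rejecting it.

```python
def acceptance_threshold(cost_false_accept: float, cost_false_reject: float) -> float:
    """Probability above which accepting the hypothesis has lower expected cost.

    With probability p that the hypothesis is true:
      expected cost of accepting = (1 - p) * cost_false_accept
      expected cost of rejecting = p * cost_false_reject
    Accepting is cheaper when p > cost_false_accept / (cost_false_accept + cost_false_reject).
    """
    return cost_false_accept / (cost_false_accept + cost_false_reject)


# Hypothetical costs for the hypothesis "my friend is honest".
# Equal costs: accept only when honesty is more likely than not.
symmetric = acceptance_threshold(cost_false_accept=1.0, cost_false_reject=1.0)

# Wrongly doubting the friend is assumed three times as costly as wrongly trusting.
asymmetric = acceptance_threshold(cost_false_accept=1.0, cost_false_reject=3.0)

print(symmetric)   # 0.5
print(asymmetric)  # 0.25
```

When wrongly rejecting the hypothesis is the costlier error, the threshold falls below one half, so less evidence is needed before acting as though the hypothesis were true. On this account, a lenient standard for evidence of the friend's honesty is a response to unequal costs.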

Psychologists Jennifer Lerner and Philip Tetlock distinguish two different kinds of thinking process. Exploratory thought neutrally considers multiple points of view and tries to anticipate all possible objections to a particular position, while confirmatory thought seeks to justify a specific point of view. Lerner and Tetlock say that when people expect to justify their position to others whose views they already know, they will tend to adopt a similar position to those people, and then use confirmatory thought to bolster their own credibility. However, if the external parties are overly aggressive or critical, people will disengage from thought altogether, and simply assert their personal opinions without justification.[95] Lerner and Tetlock say that people only push themselves to think critically and logically when they know in advance they will need to explain themselves to others who are well-informed, genuinely interested in the truth, and whose views they do not already know.[96] Because those conditions rarely exist, they argue, most people use confirmatory thought most of the time.[97]

Consequences

In finance

Confirmation bias can lead investors to be overconfident, ignoring evidence that their strategies will lose money.[6][98] In studies of political stock markets, investors made more profit when they resisted bias. For example, participants who interpreted a candidate's debate performance in a neutral rather than partisan way were more likely to profit.[99] To combat the effect of confirmation bias, investors can try to adopt a contrary viewpoint "for the sake of argument".[100] In one technique, they imagine that their investments have collapsed and ask themselves why this might happen.[6]

In physical and mental health

Raymond Nickerson, a psychologist, blames confirmation bias for the ineffective medical procedures that were used for centuries before the arrival of scientific medicine.[101] If a patient recovered, medical authorities counted the treatment as successful, rather than looking for alternative explanations such as that the disease had run its natural course.[101] Biased assimilation is a factor in the modern appeal of alternative medicine, whose proponents are swayed by positive anecdotal evidence but treat scientific evidence hyper-critically.[102][103][104]

Cognitive therapy was developed by Aaron T. Beck in the early 1960s and has become a popular approach.[105] According to Beck, biased information processing is a factor in depression.[106] His approach teaches people to treat evidence impartially, rather than selectively reinforcing negative outlooks.[64] Phobias and hypochondria have also been shown to involve confirmation bias for threatening information.[107]

In politics and law

Confirmation bias can be a factor in creating or extending conflicts, from emotionally charged debates to wars: by interpreting the evidence in their favor, each opposing party can become overconfident that it is in the stronger position.[112] On the other hand, confirmation bias can result in people ignoring or misinterpreting the signs of an imminent or incipient conflict. For example, psychologists Stuart Sutherland and Thomas Kida have each argued that US Admiral Husband E. Kimmel showed confirmation bias when playing down the first signs of the Japanese attack on Pearl Harbor.[73][113]

A two-decade study of political pundits by Philip E. Tetlock found that, on the whole, their predictions were not much better than chance. Tetlock divided experts into "foxes" who maintained multiple hypotheses, and "hedgehogs" who were more dogmatic. In general, the hedgehogs were much less accurate. Tetlock blamed their failure on confirmation bias—specifically, their inability to make use of new information that contradicted their existing theories.[114]

In the 2013 murder trial of David Camm, the defense argued that Camm was charged with the murders of his wife and two children solely because of confirmation bias within the investigation.[115] Camm was arrested three days after the murders on the basis of faulty evidence. Despite the discovery that almost every piece of evidence on the probable cause affidavit was inaccurate or unreliable, the charges against him were not dropped.[116][117] A sweatshirt found at the crime scene was subsequently discovered to contain the DNA of a convicted felon, his prison nickname, and his department of corrections number.[118] Investigators looked for Camm's DNA on the sweatshirt but failed to investigate any other pieces of evidence found on it, and the foreign DNA was not run through CODIS until five years after the crime.[119][120] When the second suspect was discovered, prosecutors charged them as co-conspirators in the crime despite finding no evidence linking the two men.[121][122] Camm was acquitted of the murders.[123]

In the paranormal

One factor in the appeal of alleged psychic readings is that listeners apply a confirmation bias which fits the psychic's statements to their own lives.[124] By making a large number of ambiguous statements in each sitting, the psychic gives the client more opportunities to find a match. This is one of the techniques of cold reading, with which a psychic can deliver a subjectively impressive reading without any prior information about the client.[124] Investigator James Randi compared the transcript of a reading to the client's report of what the psychic had said, and found that the client showed a strong selective recall of the "hits".[125]

As a striking illustration of confirmation bias in the real world, Nickerson mentions numerological pyramidology: the practice of finding meaning in the proportions of the Egyptian pyramids.[126] There are many different length measurements that can be made of, for example, the Great Pyramid of Giza and many ways to combine or manipulate them. Hence it is almost inevitable that people who look at these numbers selectively will find superficially impressive correspondences, for example with the dimensions of the Earth.[126]

In science

A distinguishing feature of scientific thinking is the search for falsifying as well as confirming evidence.[127] However, many times in the history of science, scientists have resisted new discoveries by selectively interpreting or ignoring unfavorable data.[127] Previous research has shown that the assessment of the quality of scientific studies seems to be particularly vulnerable to confirmation bias. It has been found several times that scientists rate studies that report findings consistent with their prior beliefs more favorably than studies reporting findings inconsistent with their previous beliefs.[79][128][129] However, assuming that the research question is relevant, the experimental design adequate and the data clearly and comprehensively described, the results should be of importance to the scientific community and should not be viewed prejudicially, regardless of whether they conform to current theoretical predictions.[129]

In the context of scientific research, confirmation biases can sustain theories or research programs in the face of inadequate or even contradictory evidence;[73][130] the field of parapsychology has been particularly affected.[131]

An experimenter's confirmation bias can potentially affect which data are reported. Data that conflict with the experimenter's expectations may be more readily discarded as unreliable, producing the so-called file drawer effect. To combat this tendency, scientific training teaches ways to prevent bias.[132] For example, experimental design of randomized controlled trials (coupled with their systematic review) aims to minimize sources of bias.[132][133] The social process of peer review is thought to mitigate the effect of individual scientists' biases,[134] even though the peer review process itself may be susceptible to such biases.[129][135] Confirmation bias may thus be especially harmful to objective evaluations of nonconforming results, since biased individuals may regard opposing evidence as weak in principle and give little serious thought to revising their beliefs.[128] Scientific innovators often meet with resistance from the scientific community, and research presenting controversial results frequently receives harsh peer review.[136]

In self-image